Real-Time Database for

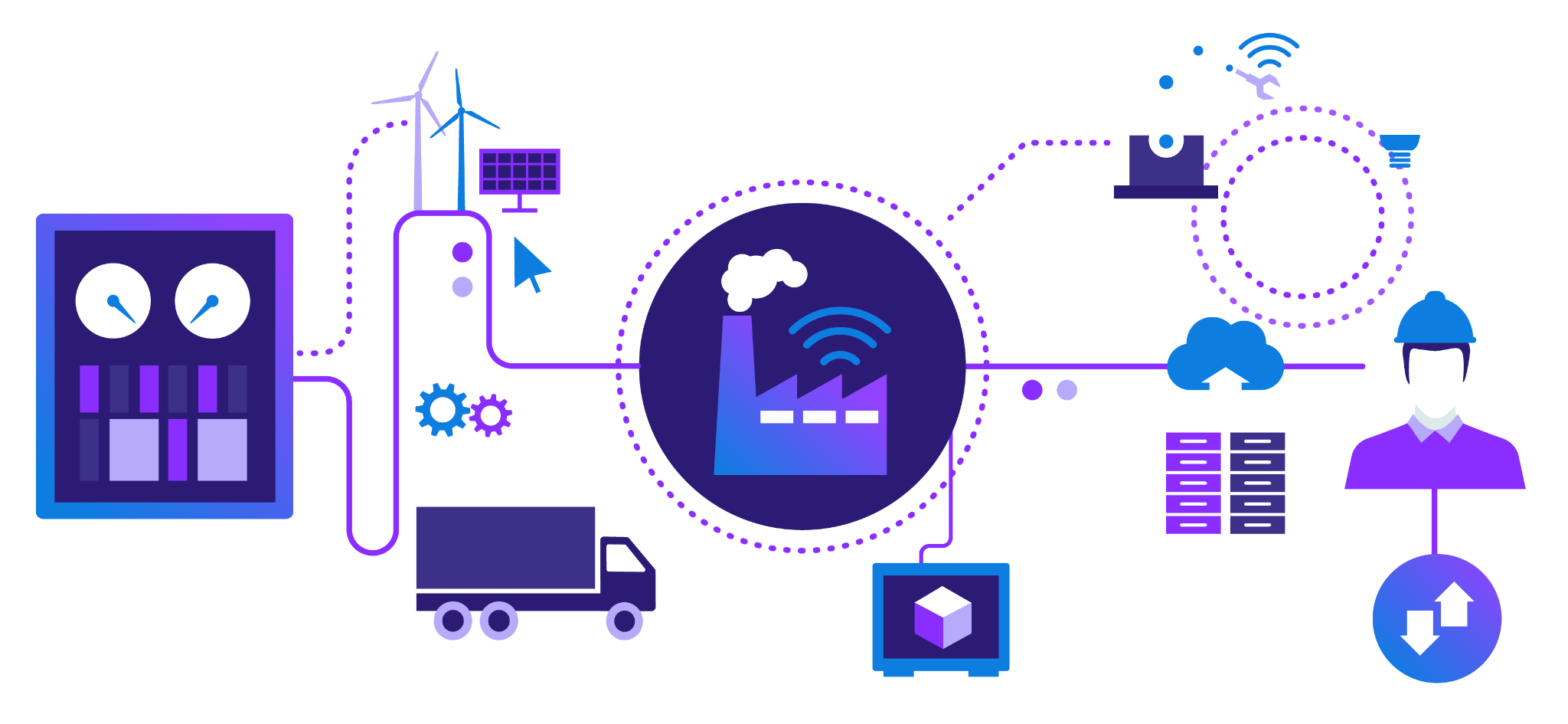

IoT Monitoring and Analytics

Explore how Apache Druid with Imply can enable your organization to analyze massive volumes of real-time data to make decisions, detect anomalies, and optimize operations with unprecedented speed and accuracy.

Trusted by developers at leading organizations

2M+

IoT events per second

<1s

Subsecond queries

50%

Reduction in database costs*


Imply: A Cornerstone for IoT Solutions

What a real-time IoT environment needs

As IoT expands to more industries and use cases, data volumes are growing exponentially. Whether it’s smart thermostats or assembly line sensors, many devices generate multiple events per second. Compounded across hundreds or thousands of devices, this rate of data growth can soon overwhelm IoT environments.

In order for a database to serve as the foundation of a real-time IoT environment, it must:

- Scale with demand, storing millions of events per second or billions per hour—and accommodate fluctuations in demand

- Efficiently organize and index data—to ensure rapid retrieval of complex queries and aggregations across massive datasets

- Ingest data via streaming—and instantly make it available for analysis without first persisting it to storage

- Ensure high availability and durability through continuous data backups

Designed to solve IoT challenges

Imply is purpose-built for the demands of IoT. Update data in real time to ensure data consistency, reduce storage costs, and improve data quality, which leads to more precise, efficient IoT analytics. Automatically interpolate missing data values using padding (LVCF), backfill, or linear regressions. Create and use timeseries aggregations for supercharged observability.

In addition, Imply consolidates your data and metadata in a single place, removing the need to store and sync the two types of data across separate databases while opening up opportunities for global visibility. Upserts enable users to quickly alter data or metadata, ensuring data accuracy, integrity, and consistency.

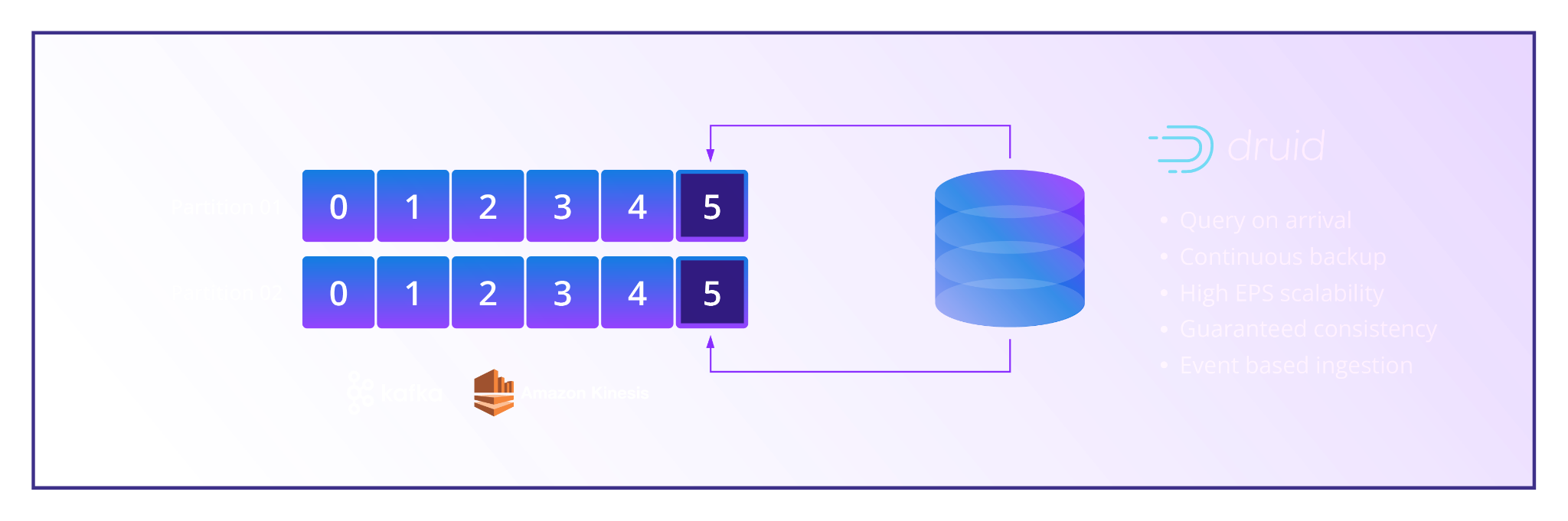

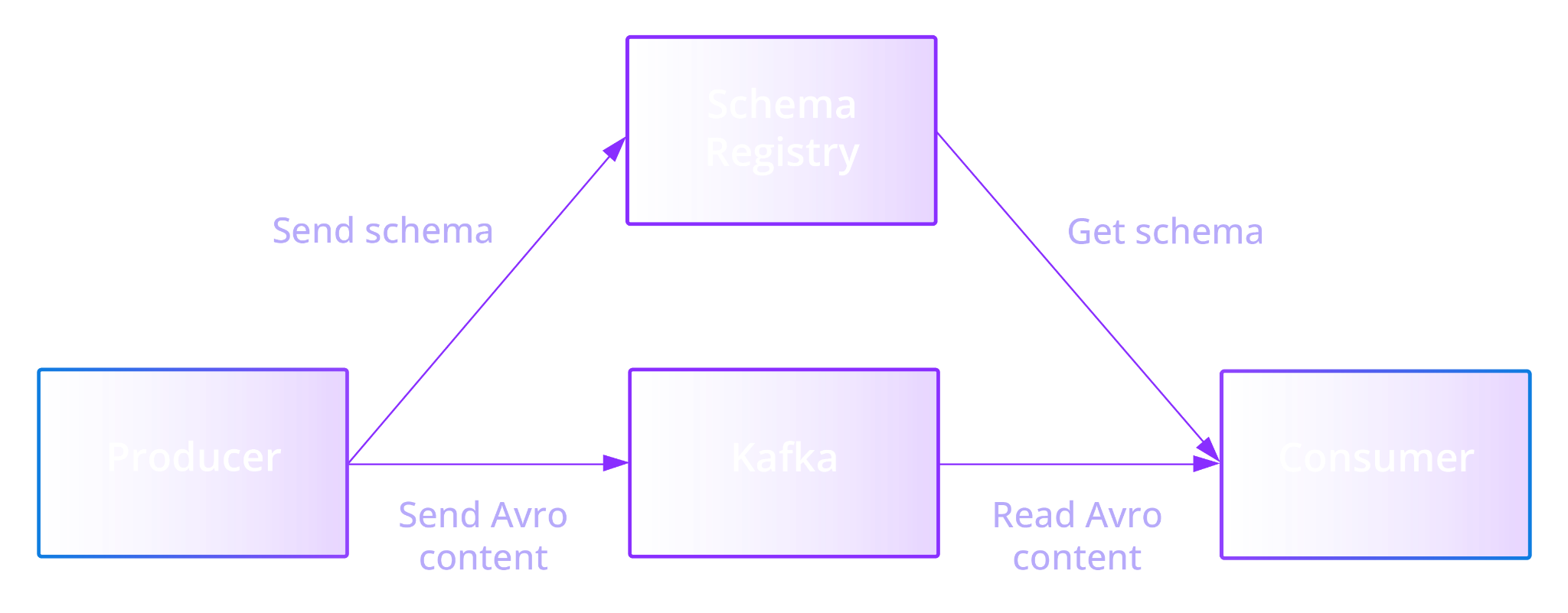

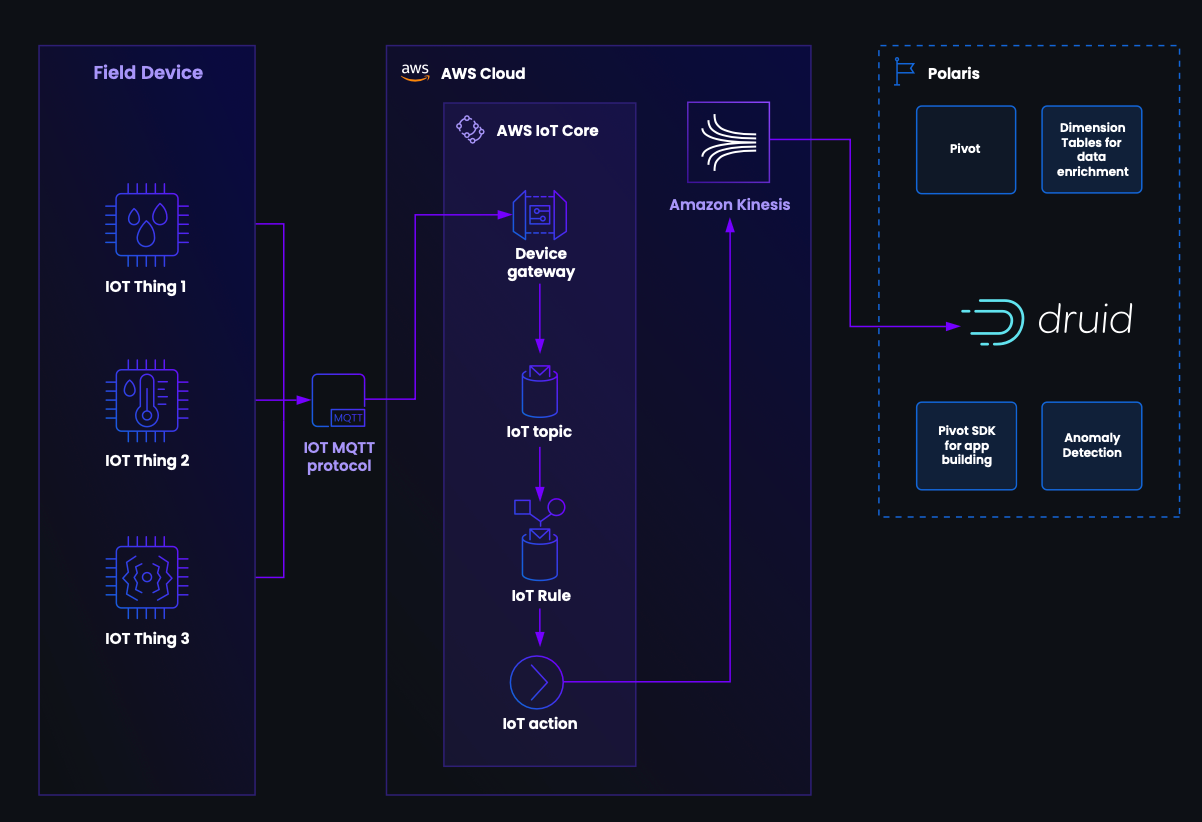

True stream ingestion

Streaming is the best way to ingest real-time data for processing, organization, and analysis. Imply is natively compatible with Amazon Kinesis and Apache Kafka (and Kafka-compatible streams, such as Confluent). That means no extra workarounds, connectors, or third-party integrations required—it just works out of the box.

Imply also includes key capabilities to optimize streaming data performance, including exactly-once ingestion to protect against duplicate data, and query-on-arrival to provide immediate access to ingested events. Every IoT event is immediately available for queries and dashboards, so your real-time data is actually available in real time.

Create and configure a Kafka topic or a Kinesis stream with just a few clicks. That way, you can get your streaming up and running in minutes, so that your team can get your insights in seconds—or less.
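The idea behind exactly-once ingestion and query-on-arrival can be sketched in a few lines. This is a minimal illustration, not Imply's or Druid's actual implementation. It assumes each stream record carries a partition number and an increasing offset (as Kafka records do), and that rows and the last ingested offset are committed together. The `ExactlyOnceStore` class and its methods are hypothetical names.

```python
from dataclasses import dataclass, field


@dataclass
class ExactlyOnceStore:
    """Toy store that tracks rows and the next expected offset per partition."""
    rows: list = field(default_factory=list)
    next_offset: dict = field(default_factory=dict)  # partition -> next offset to accept

    def ingest(self, partition: int, offset: int, event: dict) -> bool:
        """Store the event unless this offset was already ingested.

        Returns True if the event was stored, False if it was a replayed duplicate.
        Offset gaps are accepted in this toy version.
        """
        expected = self.next_offset.get(partition, 0)
        if offset < expected:
            return False  # already ingested: a retry or replay after a failure
        # Row and offset are updated in one step, standing in for an atomic commit.
        self.rows.append(event)
        self.next_offset[partition] = offset + 1
        return True

    def query(self) -> list:
        """Query-on-arrival: rows are visible as soon as ingest() returns."""
        return list(self.rows)


store = ExactlyOnceStore()
store.ingest(0, 0, {"device": "thermostat-1", "temp_c": 21.5})
store.ingest(0, 1, {"device": "thermostat-1", "temp_c": 21.7})
# The producer retries offset 1 after a timeout; the duplicate is dropped.
duplicate_stored = store.ingest(0, 1, {"device": "thermostat-1", "temp_c": 21.7})
```

Because the offset check and the write happen together, a replayed record never produces a second row, and every stored row is queryable immediately.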

Ideal for time-series data

Because it stores data in segments partitioned by time and indexes data by timestamp, Imply offers a wide range of capabilities for tackling the challenges of time-series data.

For instance, Imply can backfill late-arriving data, a key concern for organizations with devices in areas with poor connectivity. Imply customers use this feature to automatically sort lagging data, reducing developer work and facilitating IoT analytics.
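As a rough sketch of why time partitioning helps with late-arriving data, the example below files each event under the time chunk of its own timestamp, so delivery order does not matter. The hourly chunk size, the `segment_key` and `add_event` helpers, and the event fields are assumptions for illustration, not Imply's internal format.

```python
from collections import defaultdict
from datetime import datetime, timezone


def segment_key(timestamp: datetime) -> str:
    """Return the hourly time chunk a timestamp belongs to (hourly is an assumption)."""
    return timestamp.astimezone(timezone.utc).strftime("%Y-%m-%dT%H:00Z")


def add_event(segments: dict, event: dict) -> None:
    """File the event under its event-time chunk, regardless of when it arrived."""
    chunk = segments[segment_key(event["ts"])]
    chunk.append(event)
    chunk.sort(key=lambda e: e["ts"])  # keep each chunk ordered by timestamp


segments = defaultdict(list)
arrivals = [
    {"ts": datetime(2024, 1, 1, 10, 5, tzinfo=timezone.utc), "device": "a", "value": 1.0},
    {"ts": datetime(2024, 1, 1, 11, 2, tzinfo=timezone.utc), "device": "a", "value": 2.0},
    # Late arrival: produced at 10:40 but delivered after the 11:02 event.
    {"ts": datetime(2024, 1, 1, 10, 40, tzinfo=timezone.utc), "device": "b", "value": 3.0},
]
for event in arrivals:
    add_event(segments, event)
```

The late event from device `b` ends up in the 10:00 chunk next to the other 10:00 data, so a query over that hour sees it without any manual re-sorting.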

When sensors go offline and the flow of data is interrupted, missing data can be interpolated from the surrounding datapoints in the series, padded with the previous datapoint, or backfilled with the next one.
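The three gap-filling strategies can be illustrated with a short sketch. It uses the common definitions of the terms (padding carries the last known value forward, backfill uses the next known value, linear interpolation draws a straight line between neighbors) and assumes evenly spaced readings with `None` marking a gap. The function names are illustrative and are not Imply's SQL functions.

```python
def pad(values):
    """Padding: carry the last known value forward into each gap."""
    filled, last = [], None
    for v in values:
        if v is not None:
            last = v
        filled.append(last)
    return filled


def backfill(values):
    """Backfill: fill each gap with the next known value."""
    return pad(values[::-1])[::-1]


def linear(values):
    """Linear interpolation between the known neighbors of each gap."""
    filled = list(values)
    known = [i for i, v in enumerate(values) if v is not None]
    for left, right in zip(known, known[1:]):
        step = (values[right] - values[left]) / (right - left)
        for i in range(left + 1, right):
            filled[i] = values[left] + step * (i - left)
    return filled


# A sensor reports 20.0, drops two readings, then reports 26.0.
readings = [20.0, None, None, 26.0]
padded = pad(readings)
backfilled = backfill(readings)
interpolated = linear(readings)
```

Which strategy fits depends on the signal: padding suits values that change in steps (such as a setpoint), while linear interpolation suits values that drift smoothly (such as temperature).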

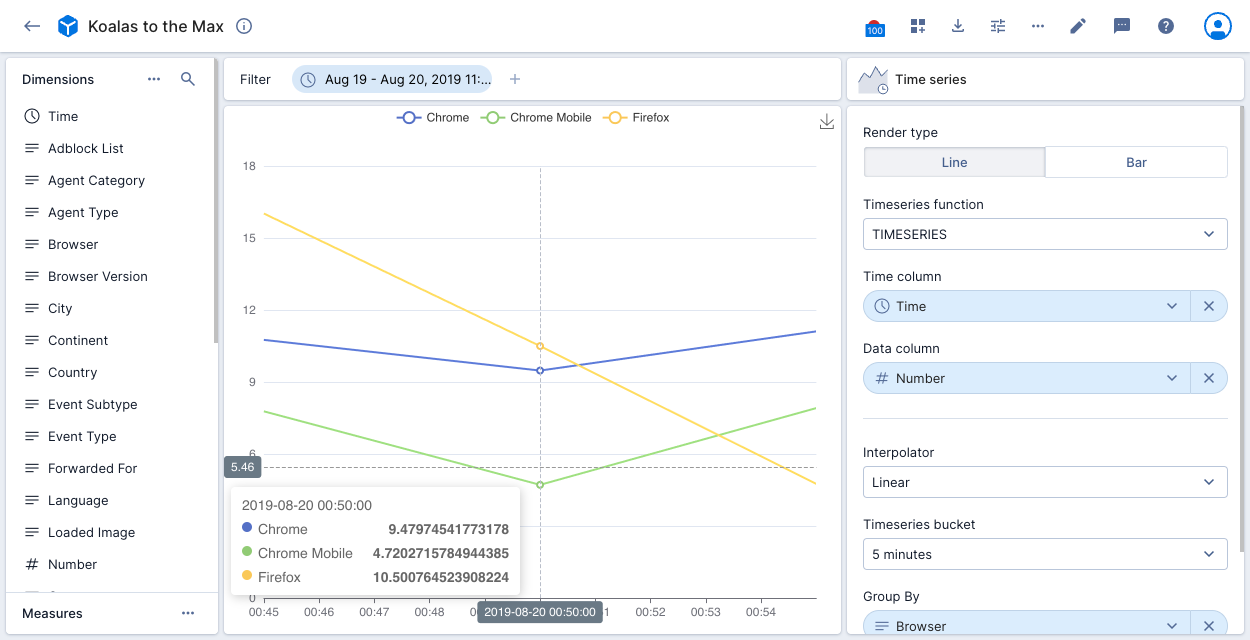

Lastly, Imply includes a number of timeseries aggregator functions. Analyze time series data, identify trends and seasonality, interpolate values, and load extra time periods to fill in boundary values. Create interactive, customizable time-series visualizations with the Imply GUI, or sort raw, timestamped data into your own time series with the latest aggregation.

Automatically work with changing data

Data schema can be diverse, even within a single IoT environment or device fleet, given factors like inconsistent firmware updates, different sensor types, or various measurements, such as temperatures, meters, or angles.

With schema autodiscovery, Imply identifies new and changed data fields and updates schemas automatically, avoiding the need for downtime or manual work, and preserving both data consistency and query performance. This provides the performance of a strongly-typed data structure alongside the ease of use afforded by a schemaless data structure.
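To make the idea of schema autodiscovery concrete, here is a minimal sketch that infers column types from incoming JSON-like events and extends the schema when a new field appears. It is an illustration under simplifying assumptions, not Imply's implementation; the `discover` and `type_name` helpers and the type names are hypothetical.

```python
def type_name(value) -> str:
    """Map a value to a coarse column type (type names are illustrative)."""
    if isinstance(value, bool):
        return "boolean"
    if isinstance(value, int):
        return "long"
    if isinstance(value, float):
        return "double"
    return "string"


def discover(schema: dict, event: dict) -> dict:
    """Return the schema extended with any fields first seen in this event."""
    updated = dict(schema)
    for name, value in event.items():
        observed = type_name(value)
        known = updated.get(name)
        if known is None:
            updated[name] = observed  # new field: add a typed column
        elif known != observed:
            # Widen long -> double; fall back to string for other conflicts.
            widen = {known, observed} == {"long", "double"}
            updated[name] = "double" if widen else "string"
    return updated


schema = {}
events = [
    {"device": "sensor-1", "temp_c": 21},
    # A newer firmware version reports a fractional temperature and a new field.
    {"device": "sensor-2", "temp_c": 21.5, "humidity": 40},
]
for event in events:
    schema = discover(schema, event)
```

Each column keeps a concrete type, which is what allows typed storage and indexing, while new fields are picked up without a manual schema change.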

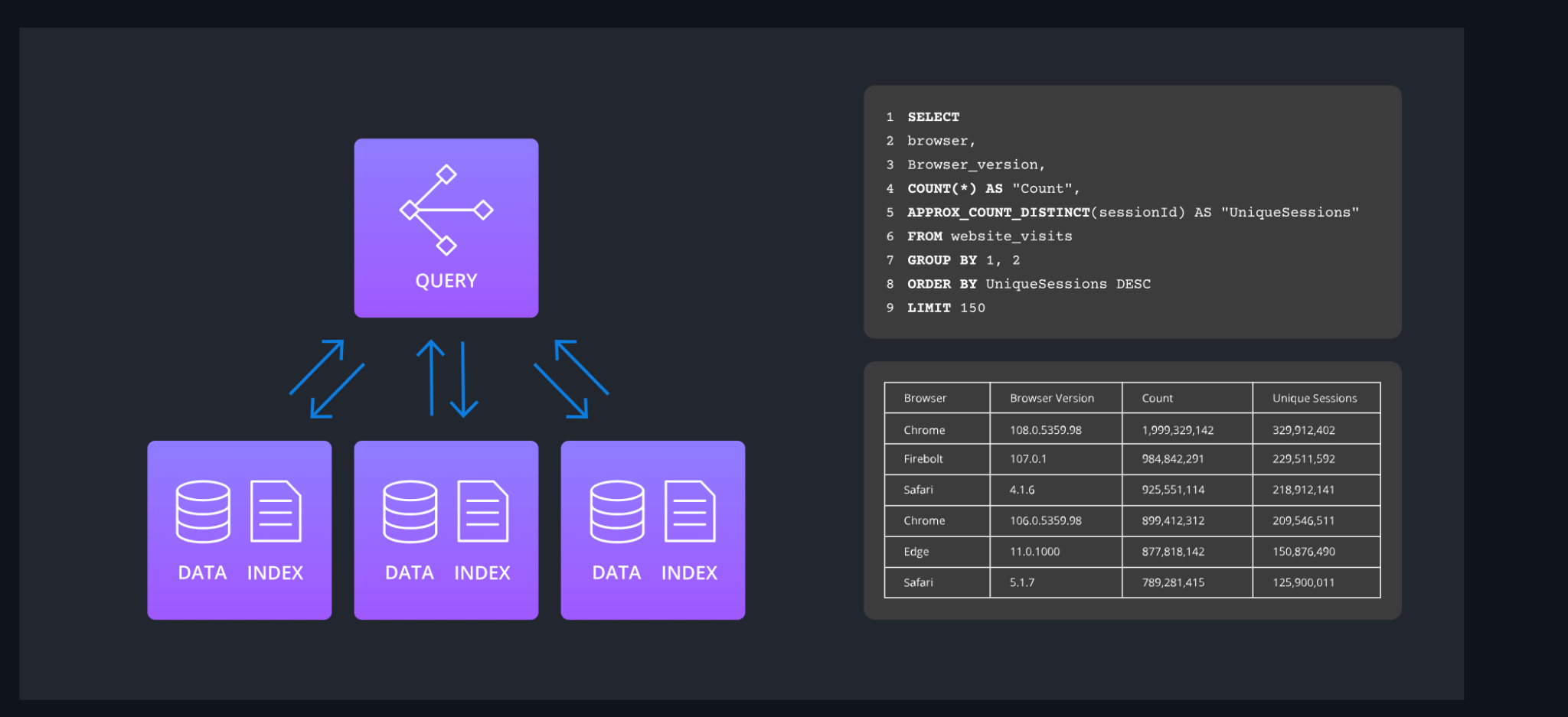

Fast queries at scale and under load

Imply retrieves data in milliseconds regardless of concurrency—whether it’s one user or one hundred, or one query per second or one thousand.

Under the innovative scatter-gather method, Imply splits up queries into discrete parts, routes them to the correct segments to acquire data, and reassembles them for results. This occurs in parallel, ensuring rapid response times on datasets from one gigabyte to one petabyte—and beyond.
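The scatter-gather pattern described above can be sketched as follows. This is a simplified, single-process illustration: the in-memory `SEGMENTS` dictionary, the daily chunking, and the function names are assumptions, and threads stand in for the separate data nodes a real cluster would use.

```python
from concurrent.futures import ThreadPoolExecutor

# Hypothetical segments: each holds the rows for one time chunk.
SEGMENTS = {
    "2024-01-01": [{"device": "a", "temp_c": 20.0}, {"device": "b", "temp_c": 30.0}],
    "2024-01-02": [{"device": "a", "temp_c": 22.0}],
    "2024-01-03": [{"device": "b", "temp_c": 28.0}],
}


def scan_segment(rows):
    """Scatter step: compute a partial (sum, count) for one segment."""
    temps = [row["temp_c"] for row in rows]
    return sum(temps), len(temps)


def average_temperature(days):
    """Route the query to the relevant segments, scan them in parallel, then merge."""
    relevant = [SEGMENTS[day] for day in days if day in SEGMENTS]
    with ThreadPoolExecutor() as pool:
        partials = list(pool.map(scan_segment, relevant))
    # Gather step: combine the partial results into the final answer.
    total = sum(s for s, _ in partials)
    count = sum(c for _, c in partials)
    return total / count if count else None


avg = average_temperature(["2024-01-01", "2024-01-02"])
```

Only the segments that cover the requested days are scanned, and each scan returns a small partial result, so the merge step stays cheap even as the number of segments grows.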

In addition, Imply includes the Druid Multi-Stage Query (MSQ) engine, which uses SQL for ingestion and querying, improves the performance and efficiency of batch ingestion, and supports long-running queries and large data transfers. This means that your engineers can use SQL, a language they already know, to work with data—and more easily scale complex analytics over massive datasets.

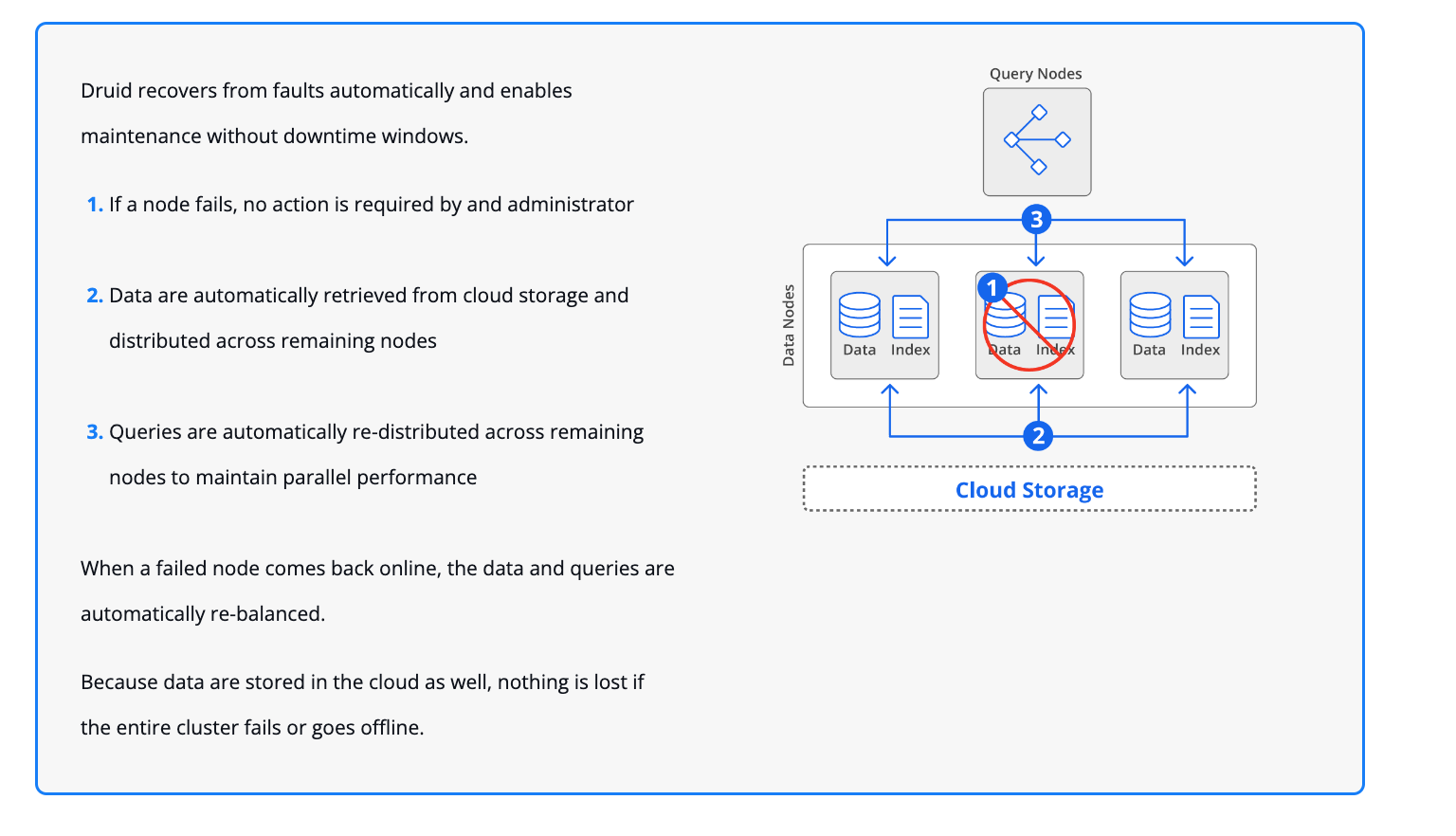

Zero downtime

In many use cases, IoT applications must always be available. Key systems for train control or healthcare monitoring are important for safety, and outages can be dangerous.

From the beginning, Imply was designed to be used continuously without downtime (planned or otherwise). When a cluster loses a node due to human error, server failures, or other reasons, the workload will be spread across surviving nodes and continue to run. If a data node goes down, Imply will automatically pull a copy of the data from deep storage and redistribute it across other nodes.

Lastly, upgrades are implemented on a rolling basis, so that there is no need to shut down the entire database.
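The failover behavior described in this section, where a lost node's data is redistributed across the surviving nodes, can be pictured with a small sketch. It is a toy model under stated assumptions: the `reassign` function, the node and segment names, and the least-loaded placement rule are hypothetical, and the reload from deep storage is represented only by a comment.

```python
def reassign(assignments: dict, failed: str) -> dict:
    """Move the failed node's segments to the least-loaded surviving nodes."""
    survivors = {node: list(segs) for node, segs in assignments.items() if node != failed}
    if not survivors:
        raise RuntimeError("no surviving nodes to take over segments")
    for segment in assignments.get(failed, []):
        # In a real system the segment would be reloaded from deep storage here.
        target = min(survivors, key=lambda node: (len(survivors[node]), node))
        survivors[target].append(segment)
    return survivors


cluster = {
    "node-1": ["seg-a", "seg-b"],
    "node-2": ["seg-c"],
    "node-3": ["seg-d", "seg-e"],
}
after_failure = reassign(cluster, failed="node-3")
```

After the simulated failure, every segment is still served by some node, which is the property that lets queries keep running while the cluster recovers.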

Imply’s architecture, built with Apache Druid, ensures reliability & durability

Manufacturing

The manufacturing sector is adopting IoT innovation to enhance production efficiency, ensure quality, and streamline resource management. By modernizing their procedures and facilities through IoT technologies, manufacturers can carve out a competitive advantage and stay ahead in a rapidly evolving landscape.

The need to integrate IoT technologies arises from the industry’s commitment to improving productivity, product quality, and resource utilization. By seamlessly incorporating IoT into operations, businesses aim to establish a balanced synergy between technology and production, positioning themselves within the evolving landscape of industrial innovation.

Explore how IoT integration can contribute to pragmatic advancements in manufacturing processes.

Predictive Maintenance: Perform proactive analysis on sensor data from manufacturing equipment to anticipate machine failures or maintenance needs. Monitor variables like temperature, vibration, and wear for timely interventions, reducing downtime and operational costs.

Production Optimization: Continuously monitor production parameters such as temperature, pressure, and vibration to identify inefficiencies and bottlenecks. Real-time analytics enable immediate adjustments, improving throughput and product quality while minimizing waste.

Quality Control: Utilize real-time analytics and machine vision technologies for instant defect detection and maintaining consistent product reliability. Analyze data from IoT sensors and visual inspection systems to uphold stringent quality standards.

Inventory Management: Navigate inventory complexities with real-time analytics powered by RFID tags and sensors. Gain precise insights into inventory levels, prevent stockouts, and streamline the ordering process. Optimize your manufacturing processes with practical and data-driven solutions.

A Fortune 100 multinational manufacturer, specializing in safety, building, and aerospace technologies, has leveraged its smart asset management platform to enhance the safety, security, and productivity of physical assets across various sites. Facing challenges with its previous database’s inability to scale for the massive volume of IoT sensor data—ranging from temperatures to humidity levels, with up to 11,000 KPIs and 7.9 million hourly data points per KPI—the company adopted Imply Manager to deploy Druid clusters on Microsoft Azure. This upgrade significantly improved data reading, updating, deduplication, and increased transaction and query handling capabilities, while also introducing an extension for efficiently sampling large datasets. Now, the platform not only supports real-time monitoring and anomaly alerts but also facilitates predictive maintenance scheduling, thereby optimizing operational efficiency and reducing costs.

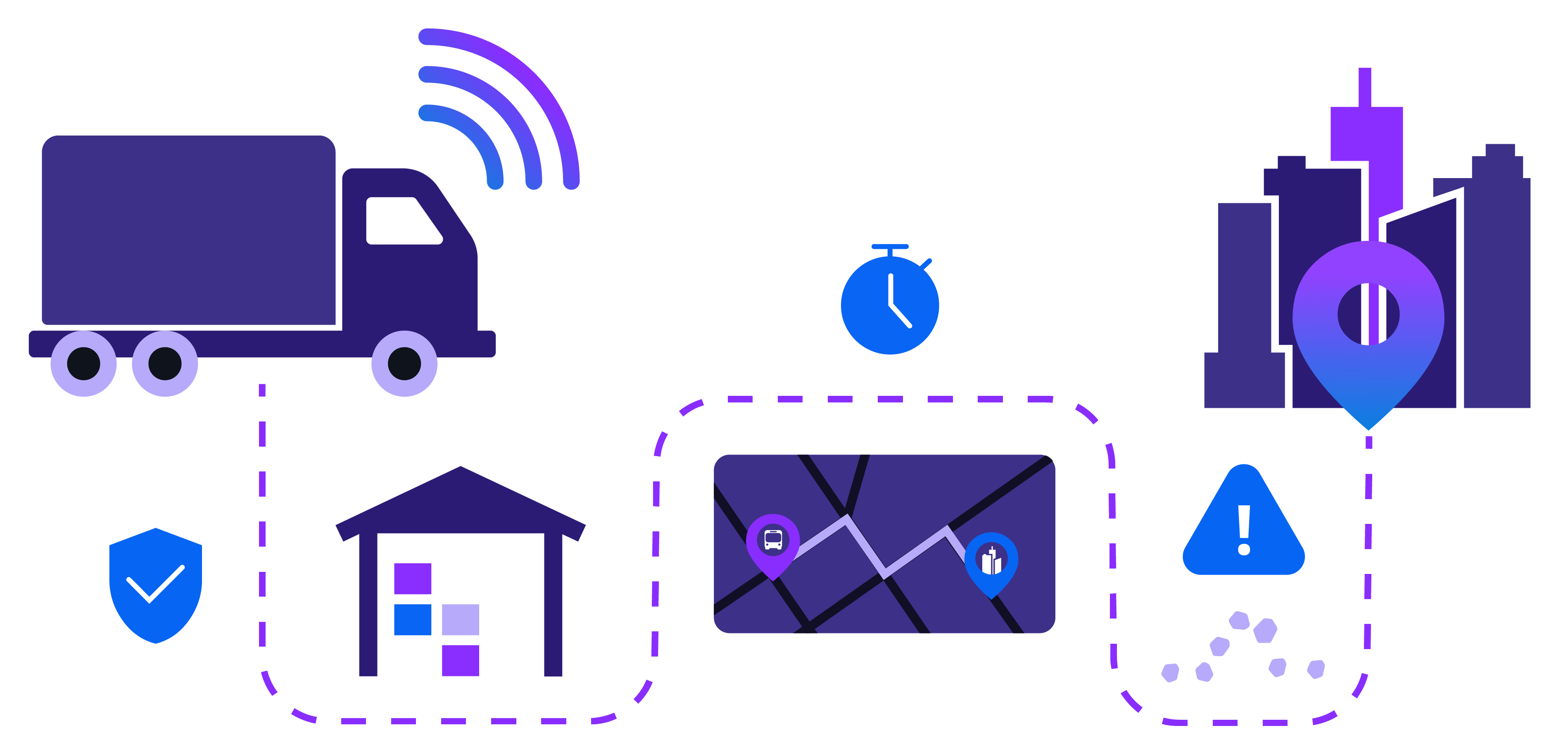

Logistics & Transportation

Efficiency and reliability are vital in the dynamic field of logistics and transportation. In this fast-paced sector, strategic analytics implementation proves transformative, enhancing operational efficiency, customer satisfaction, and overall performance. Utilize data-driven insights to navigate the complexities of supply chain management. This analytical approach enables swift decision-making, efficient resource utilization, and an improved ability to meet customer demands. Integrating analytics becomes a practical cornerstone for achieving operational excellence and staying competitive in the ever-evolving logistics and transportation industry. Stay efficient and reliable in your operations.

Predictive Maintenance: Continuously monitor vehicle health through sensors and telematics to predict maintenance needs, preventing unexpected breakdowns. This proactive approach ensures timely servicing, reduces the risk of disruptions, and enhances overall operational reliability.

Route Optimization: Analyze traffic data and vehicle performance metrics to empower logistics companies in dynamically optimizing delivery routes. This results in faster delivery times, reduced fuel consumption, and minimized vehicle wear and tear, fostering a more efficient delivery process, lowering operational costs, and ensuring enhanced customer satisfaction through timely deliveries.

Real-time Tracking: Track vehicles and goods in real-time for unparalleled visibility into the supply chain. Make informed decisions, improve asset utilization, and enhance customer communication with accurate, up-to-the-minute information on delivery status.

Fleet Management: Analyze driving patterns and performance metrics to optimize fuel consumption and promote safer driving behaviors. Monitor factors like speed, idling time, and braking patterns for targeted feedback and training, resulting in reduced fuel costs, fewer accidents, and lower insurance premiums.

Warehouse Automation: Leverage robotics and sensor-equipped machinery in warehouse operations to significantly improve efficiency and accuracy in inventory management. Automated systems streamline operations, reduce errors, enhance order fulfillment speeds, and free up human resources for more complex tasks, leading to a scalable and flexible logistics infrastructure.

An analytics provider for public transit, serving over 100 cities including Miami, Oakland, and Philadelphia, faced scalability and cost challenges with PostgreSQL while managing data from 25,000 vehicles generating JSON data. The high annual cost of over $200,000, limited scalability, and frequent outages of PostgreSQL hindered the company’s growth and ability to showcase its platform to potential clients. By switching to Druid, the company significantly reduced its operational costs to around $30,000 and enhanced its database architecture’s scalability and reliability. This transition not only supported growth by enabling the service of up to 500 customers but also improved internal stakeholders’ access to insights and prepared the company for future enhancements, including moving from batch to real-time data streaming with Amazon Kinesis.

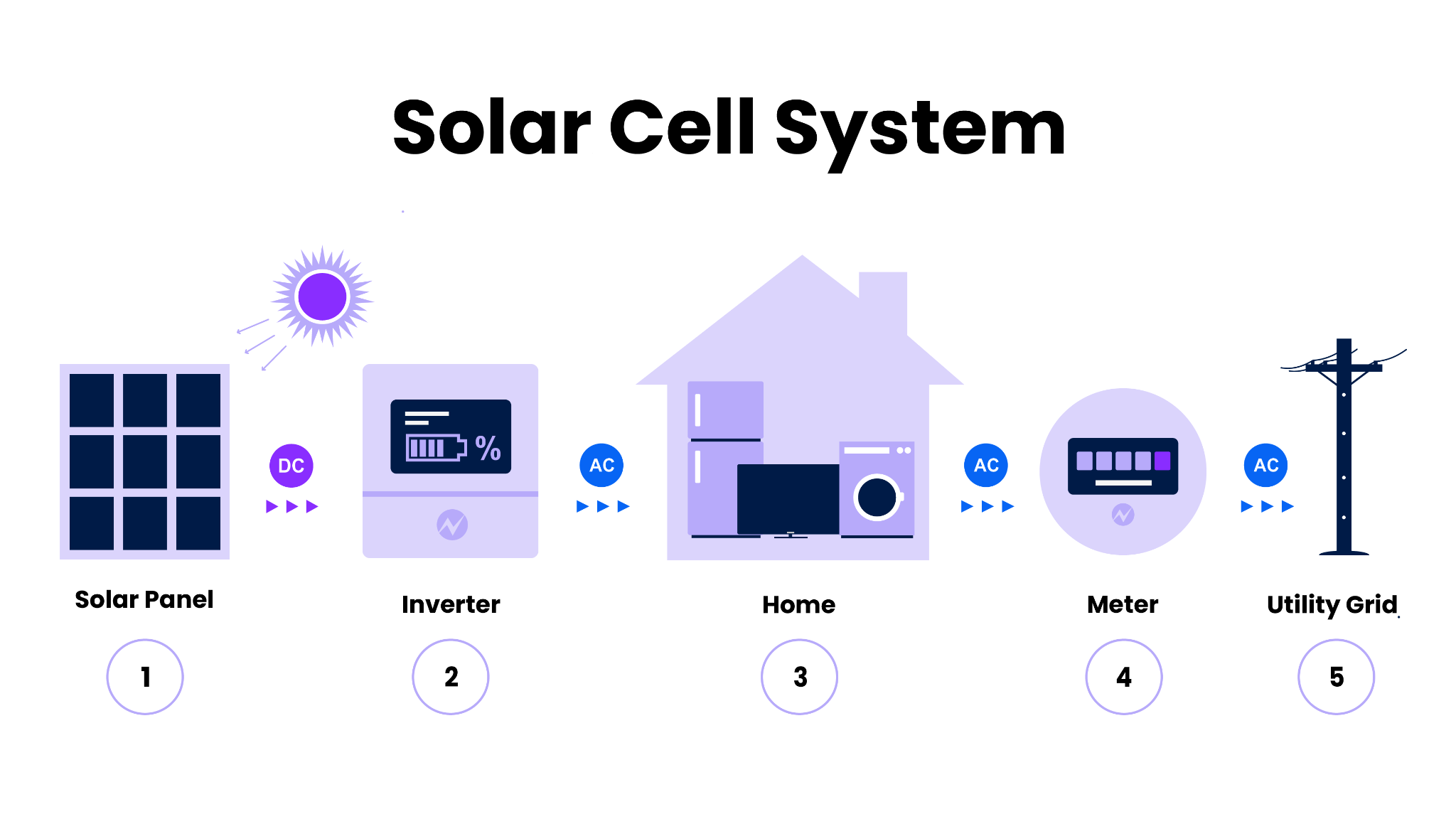

Energy

Explore the transformative journey of the energy sector towards sustainability, where the integration of Internet of Things (IoT) analytics takes center stage. This powerful combination not only serves as a cornerstone for innovation but also enhances operational efficiency. By leveraging advanced analytics, the energy industry tackles present challenges while proactively preparing for future demands. Through real-time data insights and predictive capabilities, IoT analytics empower decision-makers to optimize resource utilization, reduce environmental impact, and ensure a resilient energy infrastructure. Embrace the intersection of technology and sustainability as the energy sector pioneers a greener, more efficient future.

Smart Grid Management: Leverage analytics to analyze energy consumption patterns and weather data for optimized electricity production, efficient power distribution, and a stable energy supply.

Renewable Energy Integration: Analytics ensures a seamless balance of variable energy outputs from renewables, facilitating a smooth transition to sustainable energy without compromising reliability.

Demand Forecasting: Explore how analytics, fueled by historical data and weather forecasts, enables accurate predictions of future energy demand. This leads to informed pricing strategies and optimized operations.

Asset Management: Continuous monitoring of energy infrastructure through analytics extends asset lifespan, ensures uninterrupted service, and avoids costly repairs or replacements.

Distributed Energy Management: Discover how analytics optimizes energy sharing in microgrids, allowing stakeholders to make informed decisions on resource allocation, improving microgrid efficiency, and fostering energy independence within communities.

A leading utility management company that offers an AI-enabled SaaS platform for power plants, retailers, utilities, and grid operators transitioned its data architecture to improve service for over 45 million global customers. Initially reliant on a complex process involving Amazon S3, Athena tables, Apache Spark, and MongoDB for data analysis and visualization, the company struggled with scalability and latency as its data intake surged from 40 to 70 million smart meters. By switching to Druid, removing Apache Spark, and embracing real-time data ingestion, the company not only streamlined operations but also enhanced its ability to offer precise energy demand predictions and interactive, customizable analytics through a Pivot-based interface. This strategic overhaul significantly reduced operational costs by $4 million, increased forecast accuracy by 40%, and boosted customer lifetime value by $3,000, demonstrating the transformative impact of optimized data architecture on utility management and customer service.

Telecom

Analytics stands as a cornerstone in the evolution of the telecommunications sector, influencing operations, customer interactions, and network reliability. Its impact is substantial but grounded in practical applications. Delving into crucial use cases, analytics optimizes network performance by scrutinizing data, leading to operational efficiency. It plays a pivotal role in preemptive issue resolution, ensuring a robust and reliable network infrastructure.

Additionally, analytics contributes significantly to fraud prevention by monitoring real-time patterns and mitigating financial risks. In essence, this unassuming yet powerful tool is quietly revolutionizing telecommunications, bringing tangible improvements to operational processes, customer satisfaction, and overall industry resilience.

Network Optimization: In an era of heightened connectivity expectations, telecom operators analyze network traffic data to fine-tune networks. This enhances call quality, improves data speeds, and future-proofs against growing bandwidth demands, ultimately boosting customer satisfaction.

Fraud Detection: Analytics helps telecom companies mitigate financial risks by monitoring real-time phone call patterns and data usage. This proactive approach allows operators to detect anomalies indicative of fraudulent behavior, ensuring both revenue protection and customer trust.

Customer Experience Optimization: Understanding customer behavior is paramount in the competitive telecom sector. Analytics facilitates customer segmentation, anticipates needs, and tailors communications, leading to an improved customer experience, higher engagement, and revenue growth.

Predictive Maintenance: Ensuring reliable network equipment is vital for uninterrupted service delivery. Predictive analytics helps monitor infrastructure health, predicting potential failures and allowing for proactive maintenance. This minimizes network downtime and maintains high service quality standards.

Spectrum Management: With the challenges of 5G and a surge in connected devices, efficient spectrum use is critical. Analytics plays a pivotal role in spectrum management, allowing operators to analyze usage patterns and optimize resource allocation. This ensures enhanced network capacity, improved coverage, and the delivery of faster, more reliable services.

A global leader in telecommunications, facing the need to manage vast amounts of data from its network operations, turned to advanced analytics solutions to enhance its operational efficiency and customer service. The adoption of a scalable analytics platform enabled the company to handle real-time data analysis, leading to improved network performance, reduced operational costs, and a superior customer experience. This strategic move not only bolstered the company’s competitive edge but also paved the way for innovations in service delivery and customer engagement.

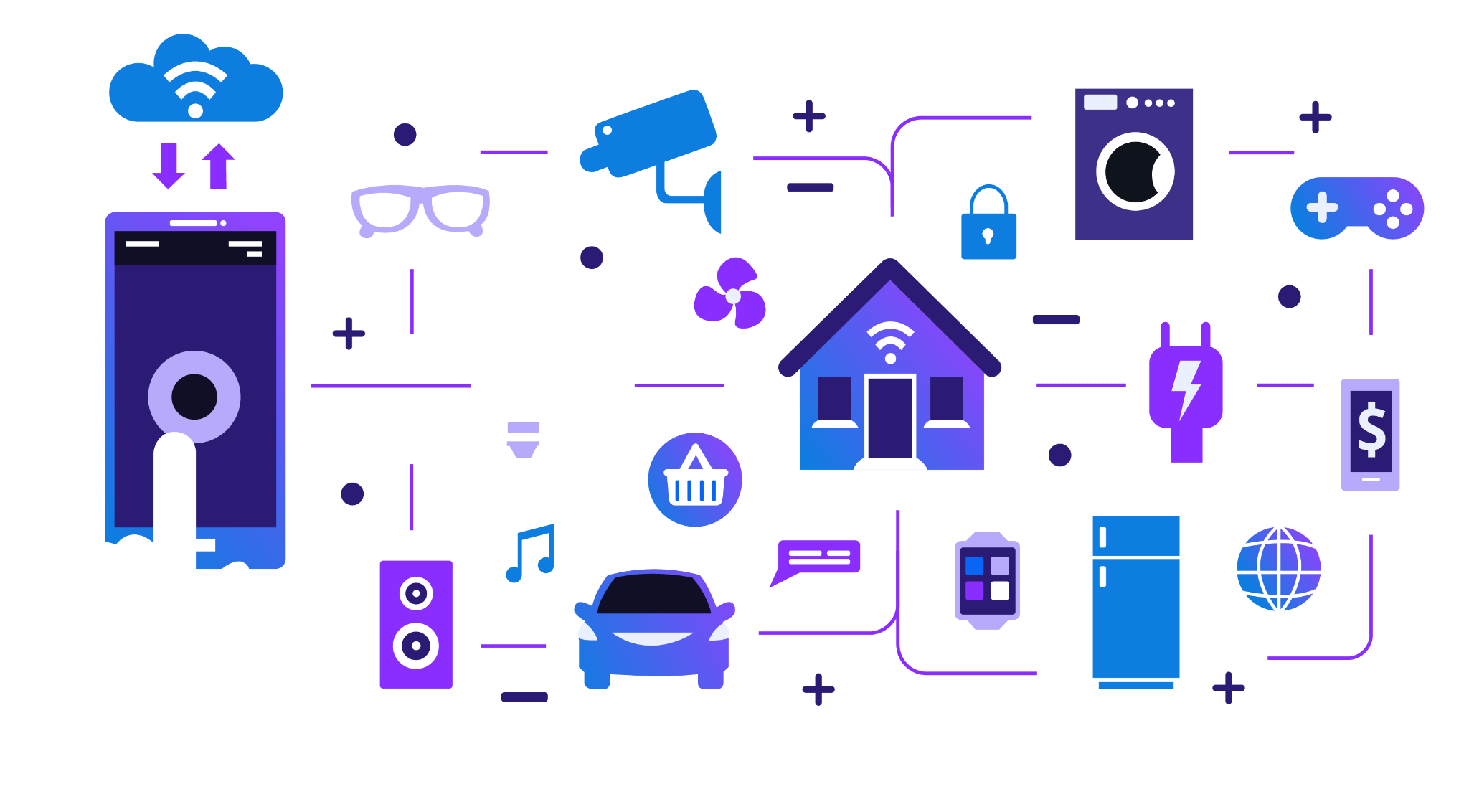

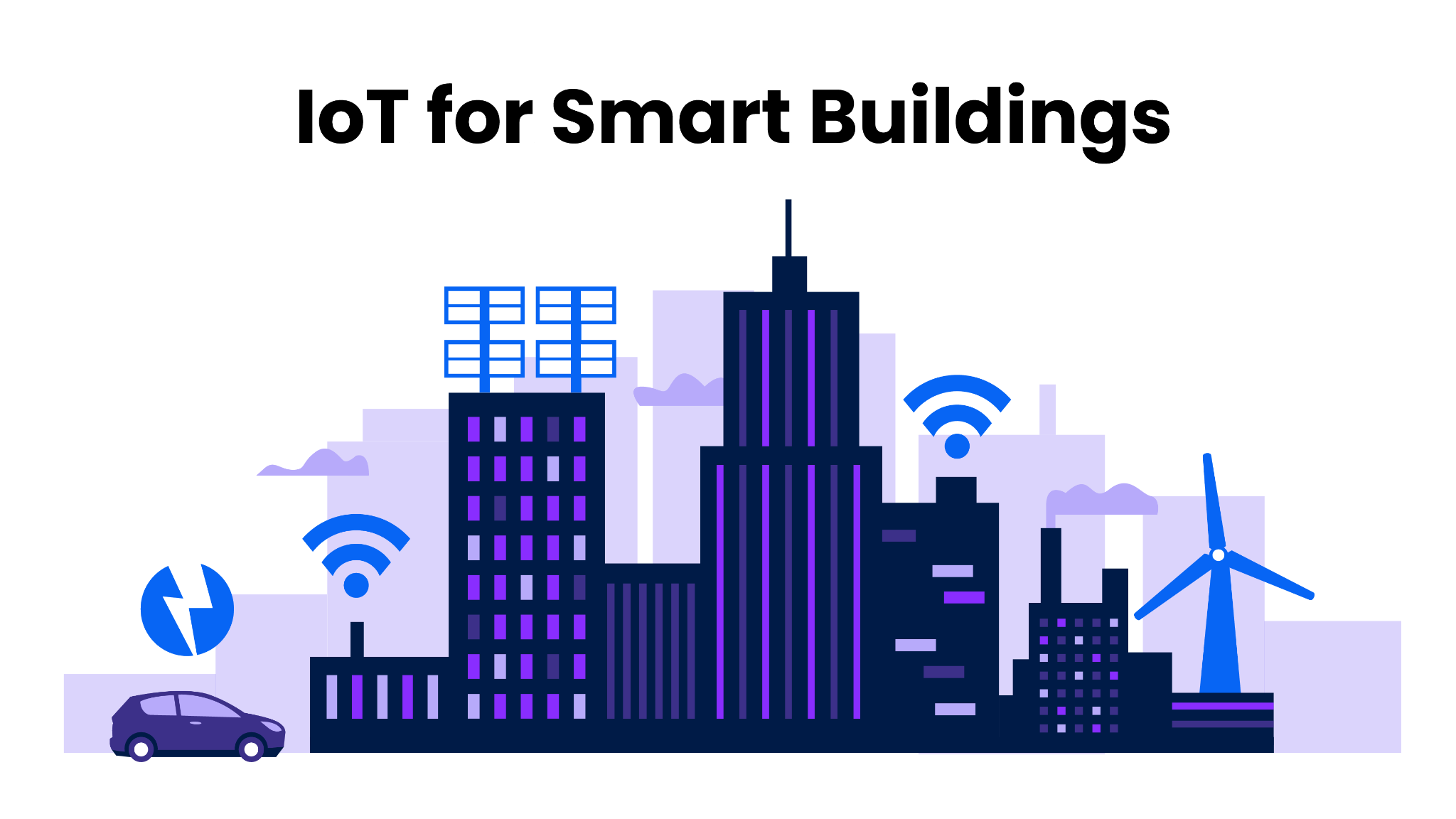

Smart Buildings

Smart building management seamlessly integrates comfort, efficiency, and sustainability. Within this framework, energy management systems harness the power of IoT data to significantly curtail consumption without compromising occupant comfort. This synergy is pivotal, optimizing various aspects of building management through analytics.

Energy management becomes a cornerstone, identifying inefficiencies and enabling real-time adjustments to reduce waste, aligning with broader sustainability objectives. Occupancy management harnesses analytics to understand and predict patterns, allowing for dynamic adjustments that conserve energy and enhance occupant comfort. In predictive maintenance, analytics ensures the longevity of building systems by anticipating potential failures, minimizing disruptions, and optimizing operations. This multifaceted approach underscores the profound role analytics plays in advancing smart building management.

Energy Management: Relies on analytics to optimize consumption, identify inefficiencies, make real-time adjustments, and significantly reduce energy waste for cost savings and environmental sustainability.

Occupancy Management: Utilizes analytics for real-time adjustments based on occupancy data, fostering energy conservation, and enhancing occupant comfort.

Predictive Maintenance: Employs continuous monitoring and analytics to anticipate potential equipment failures, minimize breakdown risks, and extend the lifespan of building systems.

Indoor Air Quality Monitoring: Ensures effective management of air quality for occupant well-being.

Security and Access Control: Identifies unusual activities, efficiently manages access, and ensures overall safety.

Thing-it, a German-based leader in smart office building management software, faced scalability and performance challenges with MySQL as their data architecture backbone.

Initially, Thing-it utilized a batch data ingestion process through Amazon SNS and Lambda, culminating in MySQL for storage and querying, which hindered the speed and consistency of data aggregations critical for their interactive dashboards.

To address these limitations and support their rapid customer expansion, Thing-it transitioned to a streaming data architecture using Amazon Kinesis and Druid, significantly enhancing their data handling capabilities to 2 billion rows per month.

This shift not only halved their database expenses but also dramatically improved query performance, with the slowest queries being executed in 300 milliseconds and a near-100% success rate.

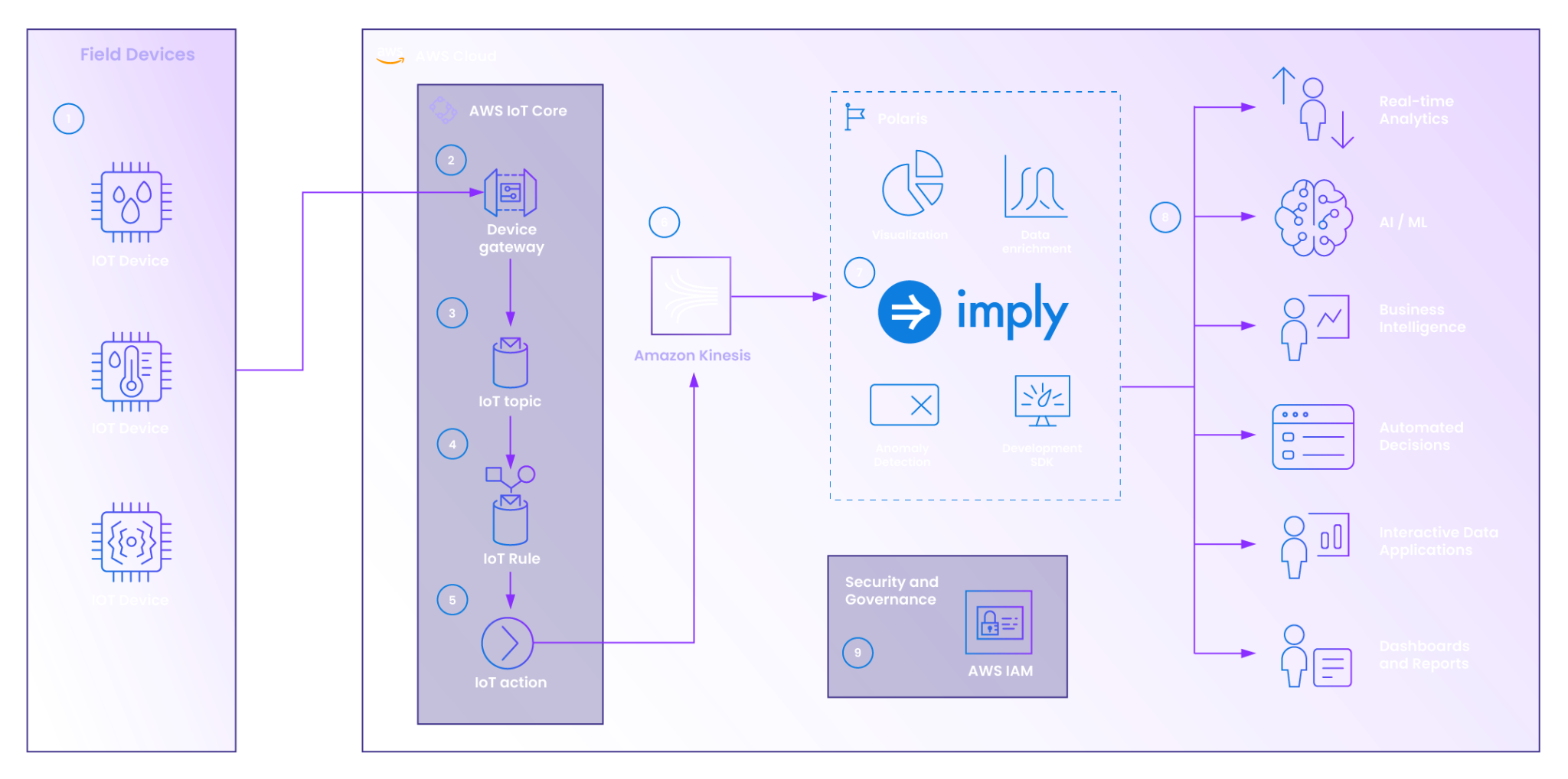

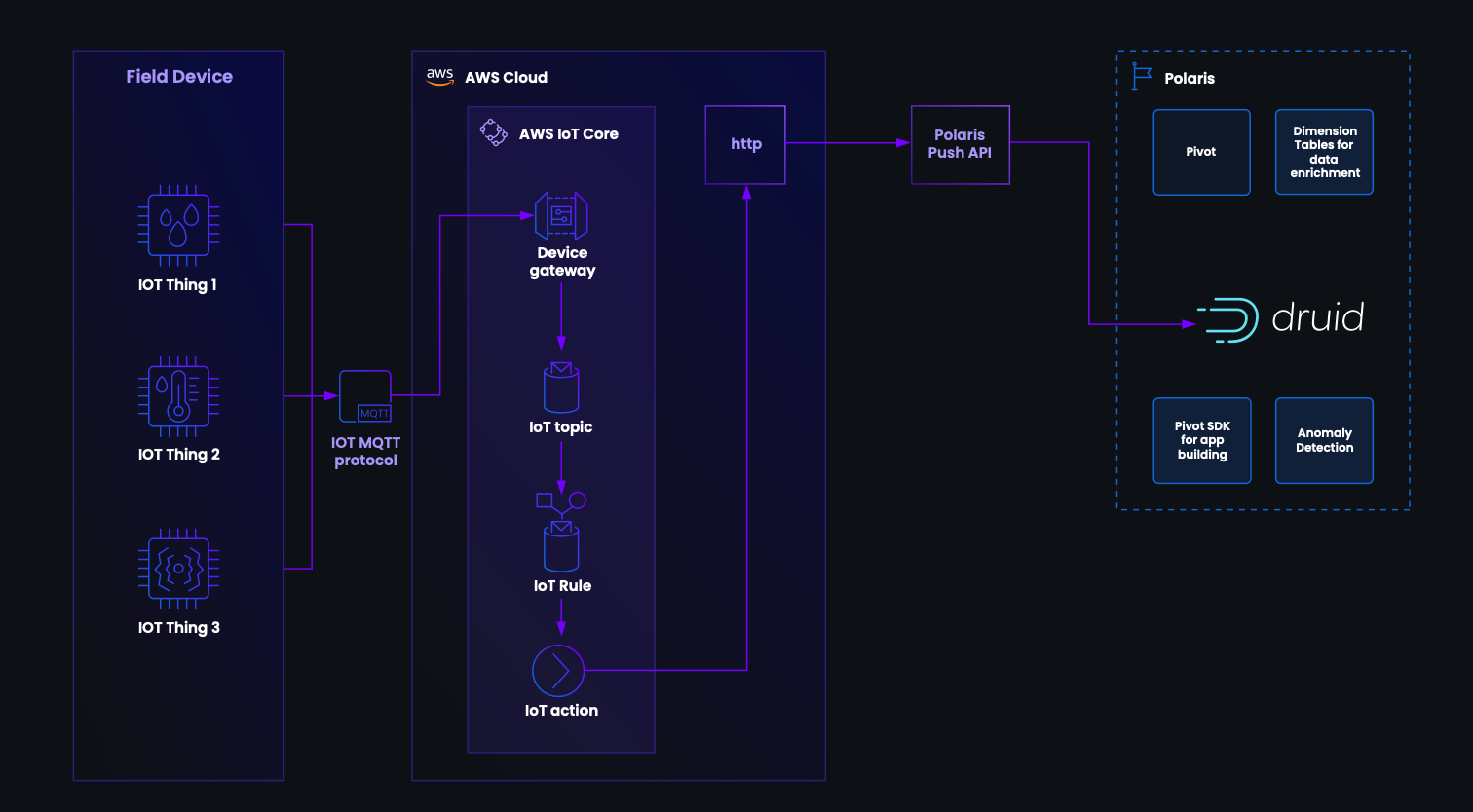

Leverage AWS IoT Core and Imply to quickly collect device metrics and build an end-to-end solution

Quickly integrate with AWS IoT Core

Whether it’s manufacturing, energy, telecoms, connected vehicles, life sciences, or logistics, AWS IoT Core and Imply are a winning combination for any IoT use case. Get the best of all worlds: AWS IoT provides a proven solution for the complexity and volume of IoT, while the Imply product family, built on the open source Apache Druid database, is used and trusted by IoT organizations of all sizes and industries. Meet any additional needs with a huge range of AWS services, including Amazon Kinesis and Amazon Managed Streaming for Apache Kafka (Amazon MSK) for streaming data ingestion, AWS IoT Greengrass for edge device management, or AWS IoT Device Defender for fleet security.

Identify current patterns and trends, predict future growth, model simulations with digital twins, manage your existing IoT infrastructure—and more. Read this blog article on monitoring your data in real time with AWS IoT Core and Imply. To get started, sign up for a free trial of AWS IoT Core and Imply Polaris, the fully managed, Druid database-as-a-service.

Grow without breaking your budget

Build smartly and grow economically. Take advantage of lightweight features, like AWS IoT Core’s MQTT broker, which intelligently directs device messages without adding operational overhead. Imply uses the unique Druid architecture to scale exponentially and query data rapidly—while costs only increase linearly.

Work flexibly with IoT data

Need rapid insights and detail in data? Whether you’re an executive or an engineer, AWS IoT and Imply empower you and your team to investigate data and make decisions in real time. Use Imply to get fast aggregations and rollups without sacrificing the granularity of your IoT data. Or use the Imply GUI to explore data through interactive, shareable visualizations, such as stack charts, starbursts, maps, and more. Developers can use both AWS IoT and Imply with SQL queries, leveraging skills they already have. Learn how intuitive Imply Pivot can turn your data into easy-to-understand graphics.
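For readers unfamiliar with rollup, the sketch below shows the general technique: raw events are pre-aggregated per time bucket and dimension so that later queries scan fewer rows. The one-minute bucket, the `rollup` function, and the event fields are assumptions for illustration; the bucket size is the knob that trades storage against granularity.

```python
from collections import defaultdict


def rollup(events, bucket_seconds=60):
    """Aggregate events per (time bucket, device), keeping count, sum, min, and max."""
    table = defaultdict(
        lambda: {"count": 0, "sum": 0.0, "min": float("inf"), "max": float("-inf")}
    )
    for event in events:
        # Truncate the epoch-seconds timestamp to the start of its bucket.
        bucket = event["ts"] - event["ts"] % bucket_seconds
        row = table[(bucket, event["device"])]
        row["count"] += 1
        row["sum"] += event["value"]
        row["min"] = min(row["min"], event["value"])
        row["max"] = max(row["max"], event["value"])
    return dict(table)


events = [
    {"ts": 0, "device": "meter-1", "value": 1.0},
    {"ts": 30, "device": "meter-1", "value": 3.0},
    {"ts": 61, "device": "meter-1", "value": 5.0},
    {"ts": 45, "device": "meter-2", "value": 2.0},
]
rolled = rollup(events)
```

Four raw events become three stored rows here, and averages, totals, and extremes per device and minute can still be answered exactly from the rolled-up table.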

Abstract away operational concerns

AWS IoT Core and Imply Polaris are fully managed, so that you and your teams don’t have to worry about running, configuring, provisioning, or troubleshooting your infrastructure. That way, you can spend more time building new features (and improving existing ones) within your IoT environment.

Plus, pay only for what you use—no more opaque billing contracts or high upfront costs. Charges are straightforward and clear, based on device connections, messages processed, and other IoT-related consumption metrics.

Examples of customers running AWS and Imply for an end-to-end IoT solution

Analyzing the cutting edge of electric vehicles

Rivian is a groundbreaking manufacturer of electric vehicles, producing EVs for rugged adventures, daily commutes, and deliveries. Rivian required real-time analytics for a number of use cases, including determining potential fixes for over-the-air (OTA) updates, analyzing the performance of test vehicles, and managing a fleet of Amazon delivery vehicles.

Their previous database struggled with queries on small datasets, with latency of up to 20 seconds that slowed down downstream applications such as dashboards. By switching to Imply, Rivian was able to scale their datasets to trillions of rows, achieve subsecond query latencies, and even remotely diagnose issues. Going forward, Rivian plans to expand their use of Imply to even more vehicles and use cases, especially as production grows.

Highlights

- 500 millisecond response time

- 98 percent reduction in query times

- Trillions of rows

Visualizing Pickups and Dropoffs for Logistics

This company provides a unified supply chain platform for its customers, enabling them to visualize collections, deliveries, and progress in real time. As a result, client organizations can redirect vehicles based on current traffic and road conditions, flag missed events for follow up, better optimize route efficiency, and improve customer satisfaction.

By selecting fully managed Imply and AWS services as the foundation for their solution, this company was able to more quickly build new features and improve existing ones. Their engineers were able to abstract away rote tasks like scaling or ensuring resilience. In addition, they utilized Imply products like Clarity for monitoring Druid performance and Cluster Manager for deploying Druid clusters and ensuring high availability.

Highlights

- 50K data points rendered within five seconds or less

- Replaced Hadoop and enabled real-time operational visibility

- Provides intuitive filters for rapid aggregations and interactive maps

Smart EV Charging for Next-Gen Vehicles

Based in Europe, this organization manufactures and manages crucial infrastructure for electric vehicles (EVs). To date, they have sold almost 400,000 stations across 120 nations—both fast chargers in public hubs as well as smaller devices for home use.

These cutting-edge fast chargers are maintained by field support teams, which need to quickly run analytics on massive volumes of telemetry data in order to pinpoint and fix any issues that arise. As a result, this organization built a capable, real-time application to monitor charger performance, powered by two fully managed services: Confluent Cloud for data streaming and Imply Polaris, the Druid database-as-a-service.

Highlights

- A decrease in query times—from 4 hours to under one second

- A reduction in query latency of more than 99.9%

- Enabled proactive troubleshooting and improved response times

“At the moment, we are ingesting the real-time data from fast-charging chargers into Imply, because they have more priority…that’s less than 10% of the chargers that we have. In the future, we will start sending more charger data to Imply. So the amount of data we’re consuming will significantly increase.”

— A data engineer at this organization

Data Analytics for Smart Drink Machines

This multinational corporation is a leader in the food and beverage sector, with a huge portfolio of best-selling brands known and trusted by households across the globe. Their latest product is an automatic beverage maker, which can create teas, coffees, and other hot drinks to enjoy from the comfort of your own home.

As this organization expands to different markets, they need to gather data on and analyze key metrics, such as successful/unsuccessful pairings, types of beverages brewed, machine types and drink preferences, and more.

To facilitate these efforts, their teams decided to build an application from the ground up, one that incorporates fully managed products like Confluent Cloud for streaming data, Imply Polaris for rapid, real-time analytics on data at scale, and Imply Pivot to build shareable, interactive visualizations for fast drilldowns and aggregations.

Learn More

Learn more about how to monitor your data with AWS IoT Core and Imply, check out our success center, or get started in minutes with a free trial of AWS IoT Core and Imply Polaris.

Revolutionizing Energy Analytics: How Innowatts Saved Millions and Improved Insights with Imply

Innowatts provides an AI-enabled SaaS platform for major utilities nationwide. Initially, they faced challenges with scaling their data infrastructure as smart meter data intake grew from 40 million to 70 million. Due to slow queries and an inability to provide granular, meter-level data, Innowatts decided to transition to Apache Druid with Imply.

By adopting Imply Enterprise Hybrid, a complete real-time database built from Apache Druid, Innowatts simplified its architecture by removing Apache Spark, enabled streaming data for more precise forecasting, and future-proofed their infrastructure by ensuring compatibility with services like Apache Kafka. The transition reduced employee workload, eliminated rote tasks, and allowed automatic detection of new columns, streamlining the onboarding process for utility customers.

As a result, Innowatts provided end users with interactive dashboards and the ability to rapidly and flexibly aggregate data (powered by Imply Pivot). This improved user experience also enabled utility analysts to more easily compare recent and historical data, more accurately determine consumption patterns, and better forecast (and adapt to) future demand.

$4 million cost reduction

40% improvement in forecast accuracy

$3,000 increase in customer lifetime value

Innowatts: Analyzing electric meters using Druid

How Innowatts simplifies utility management with Apache Druid

Enhancing Supply Chain Efficiency: PepsiCo's Predictive Analytics Journey

PepsiCo utilizes predictive analytics and machine learning to address supply chain challenges, particularly predicting and preventing out-of-stock situations. During the COVID-19 pandemic, the company experienced increased demand for certain products, prompting them to turn to analytics to forecast and manage stock levels.

They implemented a Sales Intelligence Platform, powered by Apache Druid, which integrates retailer and PepsiCo’s supply chain data to predict when items will go out of stock, allowing sales teams to take proactive measures.

Driving Success: How Rivian Re-Imagined its Data Infrastructure

As a pioneering builder of electric smart vehicles, Rivian utilizes Apache Druid and Imply to analyze and aggregate the vast amounts of data generated by its EVs.

Rivian faced unique challenges: because its vehicles are designed to be operated in remote settings without connectivity for extended periods of time, data arrives unpredictably and sometimes, all at once. In addition, Rivian also had to analyze data in order to diagnose issues and deliver solutions via over-the-air (OTA) software updates—thus saving customers a trip to service centers.

By adopting Imply Enterprise Hybrid, a complete real-time database built from Apache Druid, Rivian significantly improved query performance, reducing query times from 15-20 seconds to 500-700 milliseconds, which is particularly important for dashboards and user experience. In addition, Rivian uses the Imply Pivot GUI for building and sharing interactive visualizations for both internal and customer use.

Rivian efficiently manages and analyzes data from various use cases, including its delivery van fleet, running their Imply Hybrid clusters on Amazon EKS. Future plans include exploring time series analytics and expanding Imply usage as data volumes grow, ensuring Rivian’s competitiveness in the electric vehicle market.

Provide a smoother user experience with faster data retrieval, particularly crucial for dashboards and real-time monitoring.

Increase productivity and efficiency for engineers and analysts by reducing waiting times for data queries.

Enable more complex and resource-intensive analyses, leading to deeper insights into vehicle performance, customer behavior, and operational efficiency.

Real-time telemetry at IoT scale

Physical Hardware, Digital Analytics: IoT Challenges, Best Practices, and Solutions

Empowering Operations: How Thing-it Facilitates Success with Imply

Thing-it, a German company, uses Apache Druid and Imply for its smart asset management platform, streamlining facility operations. The switch to Druid has organized and processed data efficiently, reducing developer workload and speeding up query responses. This scalable, cost-effective solution aligns with Thing-it’s growth trajectory.

According to Software Architect Robert Sauer, “Thing-it needed a data system that offers enough headroom for expected growth and simplifies management, being more cost-effective.” Importantly, Thing-it wanted a database to reduce manual work for developers, eliminating the need to reindex or migrate data.

Imply Enterprise Hybrid, a complete real-time database built from Apache Druid, met those requirements, providing data isolation for customers, technical support, and schema flexibility, eliminating the need for developers to fine-tune indexes or create individual schemas. With Imply, Thing-it built more visualizations, analytics, and useful capabilities for their platform—and added real-time features. Users can retrieve reports on metrics like usage or foot traffic and accomplish time-sensitive tasks like finding available desks or locating experts within the office.

Freed up developers, allowing them to focus on more strategic and high-value tasks.

Accelerated query responses, thereby enhancing the overall user experience, enabling faster decision-making, and improving operational efficiency.

Scalable and cost-effective database delivers the flexibility and agility needed to effectively support evolving business needs and customer demands.

Physical Hardware, Digital Analytics: IoT Challenges, Best Practices, and Solutions

Maximizing Transit Efficiency: How Swiftly stays on time with Apache Druid

Swiftly, a transit data platform serving 145+ agencies in eight countries, facilitates over two billion rider trips yearly. It optimizes service through scheduling, planning, and operational insights.

It ingests real-time location data from 100,000+ buses, trains, and ferries, providing real-time predictions via public apps. Historical data storage supports planners in translating insights into improved service swiftly.

Users utilize a dashboard to visualize data, with Apache Druid powering backend queries, offering highly interactive capabilities. Swiftly’s API issues queries to Druid, syncing data for flexible exploration and visualization.

Adam Cath, Swiftly’s Director of Product Management, emphasizes flexibility for timely exploration, a significant business advantage. Previously using PostgreSQL, Swiftly now benefits from Druid’s high-performance queries, offering a more responsive customer experience.

Swiftly’s platform flexibility leads to up to a 40% increase in on-time performance and up to 90% faster data analysis.

Real-time Data Ingestion from over 100,000 buses, trains, and ferries facilitates the generation of real-time predictions for riders.

Fast Query Performance enables Swiftly to deliver highly interactive dashboards and customer-facing visualizations.

Flexible Data Exploration enables users to explore data efficiently, leading to faster data analysis, and contributing to increased on-time performance and improved service.

Druid Summit 2023 Keynote: Real-Time Analytics in the Real World