CONSIDERATION 1:

Analytics is an afterthought

PostgreSQL was designed for transactions. It’s difficult to get even minimum analytics performance without workarounds or extensions.

PostgreSQL

Analytics applications run best on columnar databases. Columnar databases are imminently compressible, and thus more efficient. Plus, operations such as aggregating, sorting, and filtering are not suited to row-oriented databases. PostgreSQL was designed for transactions and thus uses row stores. There are some vendors that provide column store extensions for PostgreSQL. But if you are going to set up a separate column store anyway, you should consider a database built for analytics that addresses all the challenges listed below.

Druid

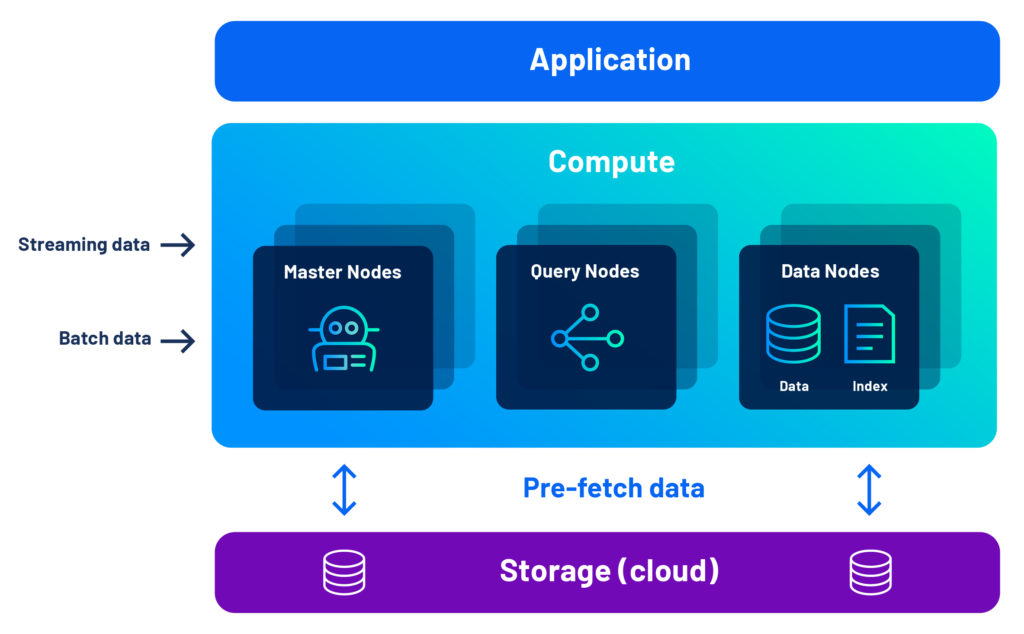

Druid was designed to serve modern analytics applications. Not only does it use a columnar store, it is built with a unique architecture that combines the best of both shared nothing performance and the flexibility of separate storage and compute. Druid can ingest data in batch mode–from your PostgreSQL databases, for example–or from real-time sources such as Kafka streams. Druid also takes the burden off your already overworked database administration team by automatically indexing and backing up your data.

CONSIDERATION 2:

Long query run times

Like any database, PostgreSQL is acceptable at small scales. For larger data volumes, sub-second analytics comes by design.

PostgreSQL

The biggest issue with query times for PostgreSQL is that processing is single-threaded by default. Recently, PostgreSQL added the capability to have queries processed by more than one CPU. However, there are still problems. First, this is still a scale-up solution only (more about that below). Second, this feature is best for high cardinality queries, not the aggregation, sorting, and filtering that are the “bread and butter” of all analytics applications. Finally, further optimization by caching and tiering is left to the administrator to control.

Druid

Druid implements a unique architecture for performance and cost saving measures like data tiering. Crucially, however, Druid pre-fetches all data to the compute layer, which means that nearly every query will be sub-second, even as data volumes grow since queries never are waiting on a caching algorithm to catch up. With a very efficient storage design that integrates automatic indexing (including inverted indexes to reduce scans) with highly compressed, columnar data, this architecture provides better query performance and price-performance.

CONSIDERATION 3:

Not built for real-time data

PostgreSQL can connect to streaming data sources. The problems begin with how it handles it from there.

PostgreSQL

Through the use of 3rd-party connectors and processing applications, PostgreSQL can ingest streaming data in batches. This cannot be considered as even near real-time. Queries must wait for data to be batch-loaded and persisted in storage, and administrators encounter further delays and complexity as they implement even more 3rd-party solutions to ensure events are loaded exactly once (no duplicates), a difficult proposition when thousands or even millions of events are generated each second.

Druid

With native support for both Kafka and Kinesis, you do not need a connector to install and maintain in order to ingest real-time data. Druid can query streaming data the moment it arrives at the cluster, even millions of events per second. There’s no need to wait as it makes its way to storage. Further, because Druid ingests streaming data in an event-by-event manner, it automatically ensures exactly-once ingestion. Learn more here.

CONSIDERATION 4:

Difficult to scale-out

Scale-out is essential for an important, rapidly-growing application. PostgreSQL simply can’t do it without 3rd-party help and extensive engineering.

PostgreSQL

As mentioned above, PostgreSQL has some limited scale up capability to handle some difficult, high cardinality queries. But scaling up is not an effective way to handle many concurrent users. For this, you need a database that scales out by adding nodes to a cluster. This is impossible for PostgreSQL natively, although there are 3rd-party solutions that provide some basic capabilities if your staff is willing to put in the engineering time to shard the data. This could quickly become an overwhelming burden as you add concurrent users.

Druid

Druid’s unique architecture handles high concurrency with ease, and it is not unusual for systems to support hundreds and even thousands of concurrent users. With Druid, scaling out is always built-in, not dependent on heroic data engineering effort, and not limited in how far you can grow. With 3 major node types, Druid administrators have fine-grained control and cost-saving tiering to put less important or older data on cheaper systems. Further, there is no limit to how many nodes you can have, with some Druid applications using thousands of nodes. Learn more about Druid’s scalability and non-stop reliability.

Hear From a Customer

Learn why Atlassian switched from PostgreSQL to Druid for their analytics apps.

Druid’s Architecture Advantage

With Druid, you get the performance advantage of a shared-nothing cluster, combined with the flexibility of separate compute and storage, thanks to our unique combination of pre-fetch, data segments, and multi-level indexing.

Developers love Druid because it gives their analytics applications the interactivity, concurrency, and resilience they need.

In a world full of databases, learn how Apache Druid makes real-time analytics apps a reality. Read our latest whitepaper on Druid Architecture & Concepts

Leading companies leveraging Apache Druid and Imply

“By using Apache Druid and Imply, we can ingest multiple events straight from Kafka and our data lake, ensuring advertisers have the information they need for successful campaigns in real-time.”

Cisco ThousandEyes

“To build our industry-leading solutions, we leverage the most advanced technologies, including Imply and Druid, which provides an interactive, highly scalable, and real-time analytics engine, helping us create differentiated offerings.”

GameAnalytics

“We wanted to build a customer-facing analytics application that combined the performance of pre-computed queries with the ability to issue arbitrary ad-hoc queries without restrictions. We selected Imply and Druid as the engine for our analytics application, as they are built from the ground up for interactive analytics at scale.”

Sift

“Imply and Druid offer a unique set of benefits to Sift as the analytics engine behind Watchtower, our automated monitoring tool. Imply provides us with real-time data ingestion, the ability to aggregate data by a variety of dimensions from thousands of servers, and the capacity to query across a moving time window with on-demand analysis and visualization.”

Strivr

“We chose Imply and Druid as our analytics database due to its scalable and cost-effective analytics capabilities, as well as its flexibility to analyze data across multiple dimensions. It is key to powering the analytics engine behind our interactive, customer-facing dashboards surfacing insights derived over telemetry data from immersive experiences.”

Plaid

“Four things are crucial for observability analytics; interactive queries, scale, real-time ingest, and price/performance. That is why we chose Imply and Druid.”

© 2022 Imply. All rights reserved. Imply and the Imply logo, are trademarks of Imply Data, Inc. in the U.S. and/or other countries. Apache Druid, Druid and the Druid logo are either registered trademarks or trademarks of the Apache Software Foundation in the USA and/or other countries. All other marks and logos are the property of their respective owners.