Founded in 2011, GameAnalytics was the first purpose-built analytics provider for the gaming industry, designed to be compatible with all major game engines and operating systems. Today, it collects data and provides insights from over 100,000 games played by 1.75 billion people, totaling an average of 24 billion sessions every month. Each day, GameAnalytics ingests and processes data from 200 million users.

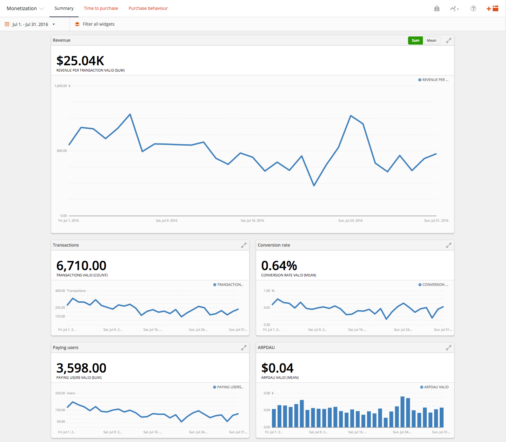

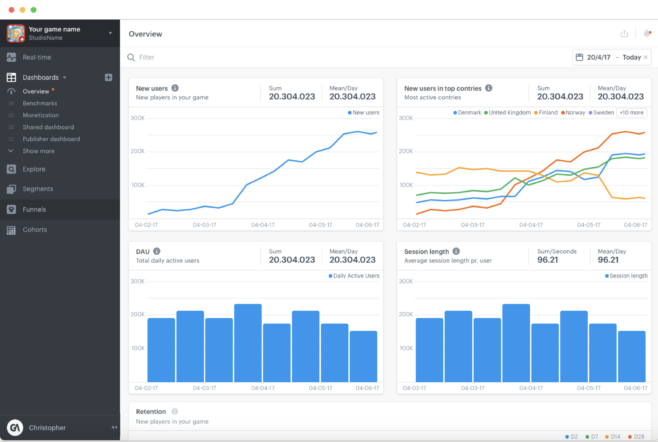

Two examples of GameAnalytics dashboards

Prior to switching to Druid, GameAnalytics (GA) used a wide variety of products in their data architecture. Data, in the form of JSON events, was delivered to and stored in Amazon S3 buckets before being enriched and annotated for easier processing. For analytics, the GA team used an in-house system built on Erlang and OTP for real-time queries, while relying on Amazon DynamoDB for historical queries. Lastly, they created a framework (similar to MapReduce) for computing real-time queries on hot data (any events generated over the last 24 hours), while also creating and storing pre-computed results in DynamoDB.

While this solution was very effective at first, stability, reliability, and performance challenges arose as GA scaled. Although key/value stores such as DynamoDB are very good at fast inserts and fast retrievals, they may encounter issues with ad-hoc analysis—especially in situations where data complexity is high.

In addition, pre-computing results for faster queries wasn’t necessarily an adequate solution. After all, more attributes and dimensions led to larger query set sizes, and past a certain point, it was impossible to pre-compute and store every possible combination of queries and results. One potential solution would be to restrict users to filter on only a single attribute/dimension in their queries—but this would remove the customer’s ability to execute ad-hoc analysis on their data.
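To make the scale of the problem concrete, here is a minimal sketch of how quickly the number of pre-computed result sets grows. The dimension names and cardinalities are hypothetical, chosen only for illustration; they are not GA's actual figures. It assumes each dimension is either left unfiltered or fixed to a single value.

```python
from math import prod

def precomputed_result_count(cardinalities):
    """Count the filter combinations to pre-compute when every dimension
    is either unfiltered or pinned to exactly one of its values."""
    return prod(c + 1 for c in cardinalities)

# Hypothetical dimensions and distinct-value counts (assumptions, not GA data).
cardinalities = {"country": 200, "platform": 5, "build": 50, "device": 1000}

# Allowing filters on any combination of dimensions at once.
all_combinations = precomputed_result_count(cardinalities.values())

# Restricting users to at most one filtered dimension per query.
single_dimension = sum(cardinalities.values()) + 1

print(all_combinations)   # 61567506
print(single_dimension)   # 1256
```

With just four dimensions, the unrestricted case needs tens of millions of stored results per metric, while the single-dimension restriction needs about a thousand. That gap is the trade-off described above.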

And ad-hoc analysis is crucial to game design, especially when it comes to iterating on and improving games based on audience feedback. Without the ability to explore their data flexibly, teams can miss out on insights, forfeit opportunities to optimize gameplay and improve the player experience, and, in the worst-case scenario, even lose players to more responsive competitors.

Druid helped resolve many of these problems. Because it was built to power versatile, open-ended data exploration, Druid did not require pre-computing or pre-processing for faster query results. Instead, Druid’s design, which could act on encoded, compressed data, avoid the need to move data from disk to memory to CPU, and support multi-dimensional filtering, enabled rapid data retrieval and powered the interactive dashboards so important to GA customers.
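One common way to support multi-dimensional filtering without pre-computed results is a bitmap index: each distinct value of a column gets a bitmap marking the rows that contain it, and filters on several dimensions become bitwise operations. The sketch below illustrates the general idea only. It is not Druid's implementation, and the column names and sample values are hypothetical.

```python
def build_bitmap_index(column):
    """Map each distinct value to a bitmap (a Python int) whose set bits
    are the row numbers where that value appears."""
    bitmaps = {}
    for row, value in enumerate(column):
        bitmaps[value] = bitmaps.get(value, 0) | (1 << row)
    return bitmaps

def matching_rows(bitmap):
    """Expand a bitmap back into a sorted list of row numbers."""
    return [i for i in range(bitmap.bit_length()) if (bitmap >> i) & 1]

# Hypothetical columns for five events (assumed data, for illustration).
country = ["DK", "US", "DK", "US", "DK"]
platform = ["ios", "ios", "android", "android", "ios"]

country_index = build_bitmap_index(country)
platform_index = build_bitmap_index(platform)

# Filter on two dimensions at once with a single bitwise AND.
hits = country_index["DK"] & platform_index["ios"]
print(matching_rows(hits))  # [0, 4]
```

Because any combination of filters reduces to ANDs and ORs over existing bitmaps, no combination has to be anticipated and stored in advance.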

In addition, Druid’s separation of ingestion and queries onto different nodes, rather than lumping various functions together on a single server, provided extra flexibility in scaling and improved resilience. “It allows more fine-grained sizing of the cluster,” backend lead Andras Horvath explains, while the “deep store is kept safe and reprocessing data is relatively easy, without disturbing the rest of the DB.”

Druid provided ancillary benefits as well. First, it was a single database that unified real-time and historical data analysis, helping GA avoid a siloed data infrastructure. Further, Druid was compatible with a wide range of GA’s preferred AWS tools, including S3, Kinesis, and EMR. Lastly, Druid’s native streaming support helped GA convert their analytics infrastructure to wholly real-time.

“Because Imply has everything we need built-in, we can focus on making features that bring value to our customers instead of non-product related work,” CTO Ramon Lastres Guerrero explains.

After switching to Druid, the GA environment ingests 25 billion events daily, with up to 100,000 queries per hour at its peak. Response times are also very fast, averaging 600 milliseconds to complete (though up to 98% of queries take 450 milliseconds).

In addition, GA has used Druid to shrink their data footprint and improve cost efficiency. By using ROLLUP to compress data, the GA team saw a tenfold decrease in the number of rows when compared to raw data. In some use cases, GA engineers removed a number of dimensions before rolling up their data, achieving up to a thousandfold reduction in raw data.
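The effect of rollup can be sketched in a few lines: events that share a truncated timestamp and the same dimension values are collapsed into one row holding aggregated metrics. This is a simplified illustration of the concept rather than Druid's ingestion code. The event fields, the hourly granularity, and the `session_length` metric are assumptions made for the example.

```python
from collections import defaultdict

def rollup(events, dimensions, granularity_seconds=3600):
    """Collapse events that share a time bucket and dimension values
    into one row with a count and a summed metric."""
    rows = defaultdict(lambda: {"count": 0, "session_length": 0})
    for event in events:
        # Truncate the epoch timestamp to the start of its bucket.
        bucket = event["ts"] - event["ts"] % granularity_seconds
        key = (bucket,) + tuple(event[d] for d in dimensions)
        rows[key]["count"] += 1
        rows[key]["session_length"] += event["session_length"]
    return dict(rows)

# Hypothetical raw events (timestamps are epoch seconds).
events = [
    {"ts": 0, "country": "DK", "platform": "ios", "session_length": 30},
    {"ts": 10, "country": "DK", "platform": "ios", "session_length": 45},
    {"ts": 20, "country": "DK", "platform": "android", "session_length": 60},
    {"ts": 3700, "country": "DK", "platform": "ios", "session_length": 15},
]

full = rollup(events, ["country", "platform"])
reduced = rollup(events, ["country"])  # drop a dimension before rolling up

print(len(events), len(full), len(reduced))  # 4 3 2
```

Dropping the `platform` dimension lets more events fall into the same row, which is why removing dimensions before rolling up yields a larger reduction. The cost is that queries can no longer filter or group by the dropped dimension.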

To learn more, read this guest blog by CTO Ramon Lastres Guerrero, or watch this video presentation.