Druid is designed to be highly available, with zero need for planned outages and high durability. But computer hardware always fails eventually. Ensuring a highly available cluster isn’t difficult, but does require some work.

Designing for High Availability

There are 4 important considerations when designing Druid for high availability: multi-node cluster design, deep storage, protecting the metadata, and clustering Zookeeper. Let’s explore each of these.

1. Multi-Node Cluster

While you can run Druid on a laptop (and many do so as a development instance), a highly available deployment requires a cluster of multiple nodes. Druid is a collection of processes, which are commonly deployed grouped together:

Master Node = overlord processes and coordinator processes

You need only one master node, but one must always be running. If you have two identical master nodes, each connected to the same Zookeeper cluster and deep storage, they will automatically act as a failover pair. One node executes all work, while the other forwards requests to it and takes over automatically in the event of a failure.

Query Node = broker processes and router processes

Any number of these can be in the cluster, and all will be active and queryable. Deploy as many as needed for performance, plus one to provide extra capacity when one fails (or when you want to take one offline for a rolling upgrade). For simpler operations, it’s a good idea to place all query nodes behind a load balancer (which should, itself, be configured for high availability).

Data Node = middle manager processes and historical processes

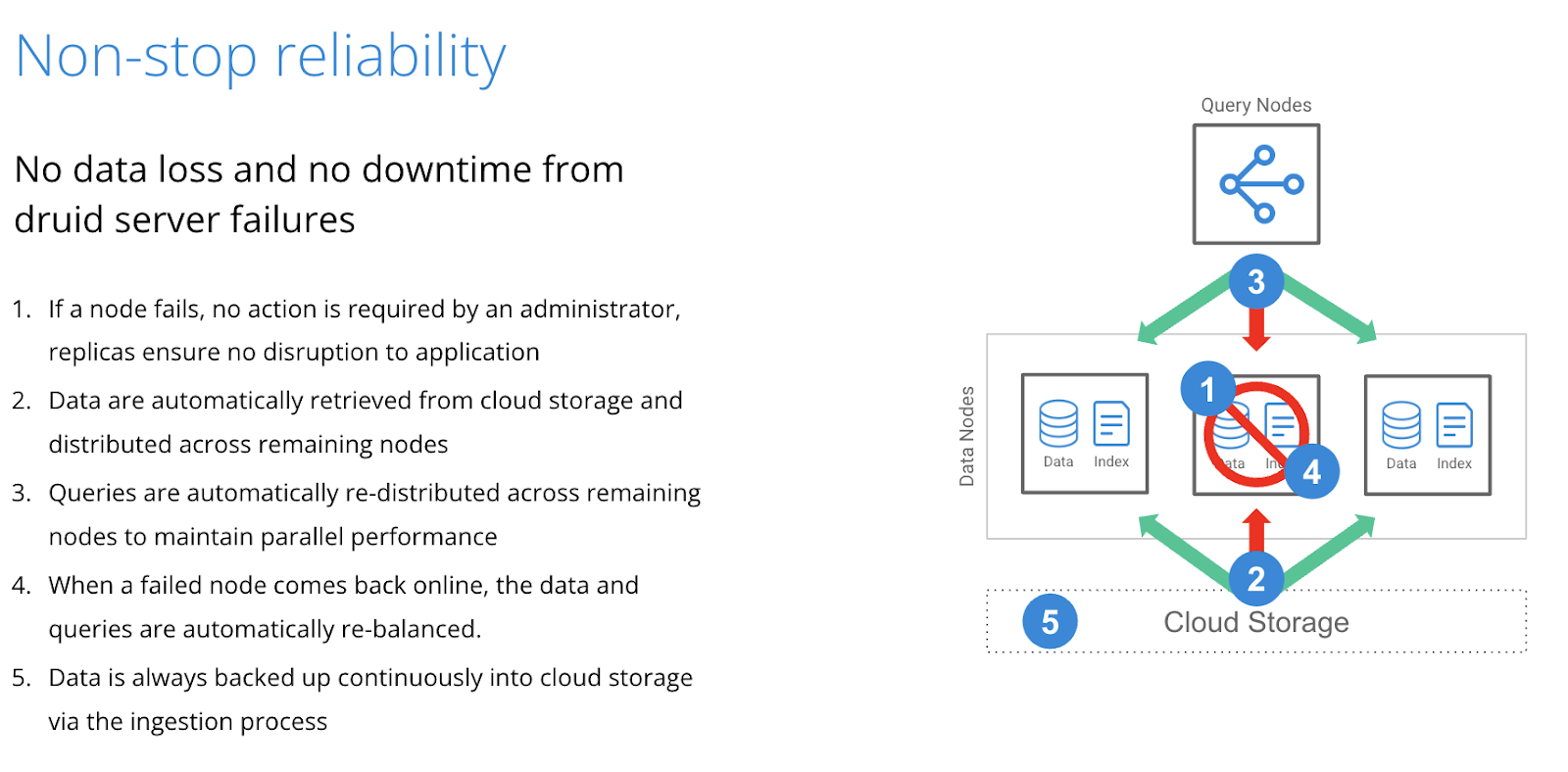

Any number of these can be in the cluster, and Druid will automatically distribute data segments and ingestion tasks. By default, each segment will be placed on 2 different nodes. When the number of nodes changes (whether because of a failure or the deliberate addition or removal of nodes), Druid will automatically rebalance segment placement as a background process. Your cluster needs as many data nodes as are needed for performance, plus one.
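To see why two copies per segment are enough to survive a single data node failure, here is a minimal sketch. It is a toy round-robin model, not Druid's actual placement algorithm, and the node and segment names are made up for illustration.

```python
def place_segments(segments, nodes, replicas=2):
    """Assign each segment to `replicas` distinct nodes, round-robin.

    Toy model only: Druid's real placement is cost-based, not round-robin.
    """
    if replicas > len(nodes):
        raise ValueError("need at least as many nodes as replicas")
    placement = {}
    for i, seg in enumerate(segments):
        placement[seg] = {nodes[(i + r) % len(nodes)] for r in range(replicas)}
    return placement


def available_after_failure(placement, failed_node):
    """Return the segments that still have at least one live replica."""
    return {seg for seg, holders in placement.items() if holders - {failed_node}}


nodes = ["data-1", "data-2", "data-3"]          # hypothetical node names
segments = [f"segment-{n}" for n in range(6)]   # hypothetical segment names

placement = place_segments(segments, nodes)
survivors = available_after_failure(placement, "data-2")
print(survivors == set(segments))  # True: every segment is still queryable
```

In this model, losing any one node leaves every segment with a surviving copy, which is the property the default of 2 replicas is meant to provide.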

Each of these nodes can be deployed directly to a Linux operating system (usually using a virtual machine) or as a container (optionally managed by Kubernetes). Whether VMs or containers, the considerations for high availability are the same.
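The "as many as needed for performance, plus one" rule for query and data nodes can be sketched as a small calculation. The load and capacity figures below are hypothetical. Real sizing depends on your own benchmarks.

```python
import math


def nodes_required(peak_load, per_node_capacity, spare=1):
    """Nodes needed to carry peak_load, plus `spare` nodes of headroom.

    peak_load and per_node_capacity use the same (arbitrary) unit,
    for example queries per second.
    """
    base = math.ceil(peak_load / per_node_capacity)
    return base + spare


# Hypothetical figures: 900 queries/s at peak, 250 queries/s per node.
print(nodes_required(900, 250))  # 4 nodes for load + 1 spare = 5
```

With the spare node in place, one node can fail or be taken offline for a rolling upgrade while the remaining nodes still cover peak load.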

2. Deep Storage

In addition to copies stored on data nodes, Druid also keeps a copy of each segment in durable deep storage. If you’re running on cloud computing, this is an object store (such as Amazon S3, Azure Blob Storage, or Google Cloud Storage), which is inherently multi-location and highly durable. If you’re running on-premise, you’ll need to configure HDFS or S3-compatible storage in a durable configuration.

3. Protect the Metadata

Druid stores metadata in a small RDBMS (usually MBs in size and never more than a few GBs), using either MySQL or PostgreSQL. If you’re running on cloud computing, use a high-availability database service (such as Amazon RDS, Azure Database for PostgreSQL, or Google Cloud SQL); otherwise, be sure to create a highly available database configuration. This is usually an active-passive cluster, where all work is executed on the active server and copied to the passive server; when the active server fails, the passive server is promoted to be active and work continues.

4. Cluster the Zookeeper

Apache Zookeeper® is used to keep some of Druid’s configuration data. The Zookeeper should be deployed on its own cluster of 3-5 servers. The Zookeeper cluster should be spread across three different data centers or cloud availability zones.

One common choice is to co-deploy master nodes and zookeeper on the same systems. In this case, the same servers can safely and reliably run both the master node processes and Zookeeper.
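The recommendation of 3-5 servers follows from how a Zookeeper ensemble stays available: it keeps working only while a strict majority (a quorum) of its servers is up. A short sketch of that arithmetic:

```python
def quorum_size(ensemble_size):
    """Smallest number of servers that forms a strict majority."""
    return ensemble_size // 2 + 1


def tolerated_failures(ensemble_size):
    """How many servers can fail while a quorum still remains."""
    return ensemble_size - quorum_size(ensemble_size)


for n in (3, 4, 5):
    print(n, quorum_size(n), tolerated_failures(n))
# 3 2 1
# 4 3 1
# 5 3 2
```

A 4-server ensemble tolerates no more failures than a 3-server one, which is why odd sizes are the usual choice.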

Surviving Failures

There are three major categories of failure, of increasing impact and decreasing frequency: server failure, data center failure, and regional failure.

Server failure will happen, eventually, to every server. Amazon Web Services, for example, offers a 99.5% service level target for each EC2 instance, which means you can expect up to about 44 hours of downtime for each virtual machine each year!
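As a rough check, the downtime that an availability target permits over a year can be worked out directly. The sketch below assumes a 365-day year.

```python
HOURS_PER_YEAR = 365 * 24  # 8760, assuming a non-leap year


def max_downtime_hours(availability_percent):
    """Hours per year a service may be down while still meeting the target."""
    return HOURS_PER_YEAR * (1 - availability_percent / 100)


print(round(max_downtime_hours(99.5), 1))  # 43.8
```

A 99.5% target leaves room for 0.5% of 8,760 hours, or nearly two full days per year for a single machine, which is why the cluster rather than any one server has to provide availability.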

Fortunately, server failure will not cause a Druid outage if the guidelines above are followed.

- When a query node fails, queries that were in-process will automatically resubmit, while all future queries will be directed to other query nodes

- When a data node fails, Druid will redirect active queries to other nodes with copies of segments-under-query, while automatically rebalancing the placement of segments across the cluster, copying segments from deep storage to data nodes if needed

- When the active master node fails, another master node in the cluster will automatically take over all tasks

- When a Zookeeper server fails, other servers in the cluster will automatically take over all tasks

- When the active metadata server fails, the database automatically promotes a passive server to become active and work continues

After the failure, you can keep running with less hardware or, whenever it’s convenient for you, add more hardware to the cluster. Except for the active-passive metadata servers, no “failback” is ever needed in Druid – the new systems just become part of the cluster and are ready for the next failure.

Data center failure is fairly rare. Usually, it’s a network failure, but it could be a fire, flood, or seismic event.

If you’re running on a cloud provider with multiple availability zones, such as AWS, Azure (in most regions), or GCP, then you are already protected against data center failures, as long as your metadata server cluster and Zookeeper are configured to span across more than one availability zone. Your deep storage in the cloud is already copied across multiple availability zones.

In the event of a cloud data center failure (which might or might not also be an availability zone failure), no data will be lost as long as your deep storage, metadata, and Zookeeper data sets are protected. You can provision and stand up new servers in a surviving availability zone, usually in a few minutes, and continue your Druid work.

If you are not deployed on a cloud with multiple availability zones, you’ll need to do more, and protect against data center failure as if it were regional failure.

Regional failure is extremely rare. For a cloud region, it requires multiple concurrent failures across a broad geographic area – but it has happened. It’s a bit more common for on-premise environments, especially if the “region” is only a single data center.

Protecting Druid against a regional failure requires replicating deep storage to a different region, such as replicating S3 buckets in us-east-1 (N. Virginia) to us-west-2 (Oregon). This is fairly inexpensive, and after a regional failure, the Druid cluster can be rebuilt from the replicated data, though a small amount of data that was in-flight at the time of the failure may be lost.
