We call them modern analytics applications: apps that serve real-time analytics to hundreds or thousands of concurrent users on streaming (as well as batch) data. They’re the apps developed at Netflix, Twitter, Confluent, Salesforce, and thousands of other companies, and they play a key role in those businesses.

So what do these apps actually do? And why don’t data warehouses or the other 300+ databases out there fit the bill? Let’s look at what devs are trying to build in these apps and why they turn to Druid. The main use cases are:

- Operational visibility at scale

- Customer-facing analytics

- Rapid drill-down exploration

- Real-time decisioning

Operational visibility at scale

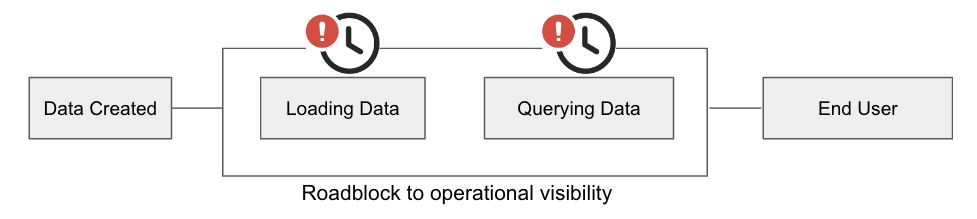

Devs building apps for operational visibility (e.g. observability, product analytics, digital operations, IoT, and fraud detection) are tasked with closing the gap between events created and time-to-insight.

Now technology-wise, on the surface there are several technologies that can analyze events in real time. These include stream processors like Apache Flink or ksqlDB, time-series databases like InfluxDB or TimescaleDB, or even key-value stores like Redis. But all of these technologies have the same Achilles’ heel: they can’t do OLAP-style queries well at any meaningful scale.

| | Stream Processors | Time-Series DBs | Key-Value Stores |
|---|---|---|---|
| Real-time alerts | X | X | X |
| Basic SELECT queries | X | X | X |
| Complex OLAP queries | – | – | – |
| Sub-second response at scale | – | – | – |

Let’s say the app calls for analyzing time and non-time-based dimensions over the past month (at an ingestion rate of 1 million events per second, that’s roughly 2.6 trillion events). Setting aside the challenge of handling ingestion at this scale, any database that supports stream ingestion can report the current status and, to a degree, run simple aggregations of numbers and counters. But if the app requires aggregation across all of these events, GROUP BY on non-time-based attributes, or high concurrency, then the wrong database = spinning wheel of death as people sit and sit waiting for results.
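The event count quoted above is easy to sanity-check. Here is a back-of-the-envelope sketch, assuming a constant ingestion rate and a 30-day month (both simplifications; the function name is just for illustration):

```python
# Back-of-the-envelope check of the monthly event volume.
# Assumptions: constant ingestion rate, 30-day month.
EVENTS_PER_SECOND = 1_000_000
SECONDS_PER_DAY = 24 * 60 * 60


def events_ingested(rate_per_second: int, days: int) -> int:
    """Total events ingested over `days` at a constant rate."""
    return rate_per_second * SECONDS_PER_DAY * days


total = events_ingested(EVENTS_PER_SECOND, 30)
print(f"{total:,} events (~{total / 1e12:.2f} trillion)")
```

A 30-day month works out to about 2.59 trillion events and a 31-day month to about 2.68 trillion, which is where the "2.6 trillion" ballpark comes from.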

That’s why devs turn to Apache Druid. Salesforce engineers built an analytics app using Druid to monitor their product experience. The app enables engineers, product owners, and customer service reps to query any combination of dimensions, filters, and aggregations on real-time logs for performance analysis, trend analysis, and troubleshooting – query results returning in seconds with billions to trillions of streaming events ingested every day.
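To make "any combination of dimensions, filters, and aggregations" a bit more concrete, here is a minimal sketch of how an app might assemble such a query for Druid's SQL-over-HTTP endpoint (`POST /druid/v2/sql`). The `app_logs` datasource, the column names, and the `build_sql_payload` helper are hypothetical and are not taken from the Salesforce deployment.

```python
import json


def build_sql_payload(dimensions, filters, days=30, limit=10):
    """Build a JSON body for Druid's SQL API.

    `dimensions` are column names to GROUP BY; `filters` maps column
    names to required string values. The "app_logs" datasource is a
    hypothetical example.
    """
    dims = ", ".join(f'"{d}"' for d in dimensions)
    conditions = [f"__time >= CURRENT_TIMESTAMP - INTERVAL '{int(days)}' DAY"]
    parameters = []
    for column, value in filters.items():
        # Filter values are passed as dynamic parameters, not inlined.
        conditions.append(f'"{column}" = ?')
        parameters.append({"type": "VARCHAR", "value": value})
    where_clause = " AND ".join(conditions)
    query = (
        f"SELECT {dims}, COUNT(*) AS events "
        f'FROM "app_logs" '
        f"WHERE {where_clause} "
        f"GROUP BY {dims} "
        f"ORDER BY events DESC LIMIT {int(limit)}"
    )
    return {"query": query, "parameters": parameters}


payload = build_sql_payload(["country", "browser"], {"status": "error"})
print(json.dumps(payload, indent=2))
```

The app would send this body to the cluster's SQL endpoint; swapping the dimension list or filter map changes the slice without any precomputed views.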

Customer-facing analytics

Atlassian, Forescout, and Twitter are seemingly unrelated but they have one thing in common. They see analytics for more than internal decision-making. They’re giving their customers insights as part of a value-added service or a core product offering entirely.

When building analytics for external users, a whole different set of tech challenges needs to be addressed. Concurrency is a clear top factor. Not only should the database support current and future user growth with ease, but concurrency has to be addressed economically or the infrastructure cost will go through the roof.

Another key factor is sub-second query response for an interactive experience. External users are typically paying customers and they don’t want to wait for queries to process. Instead of fighting PostgreSQL, Hive, or any other analytics database to meet the performance reqs at scale, devs find that Druid’s the easy answer.

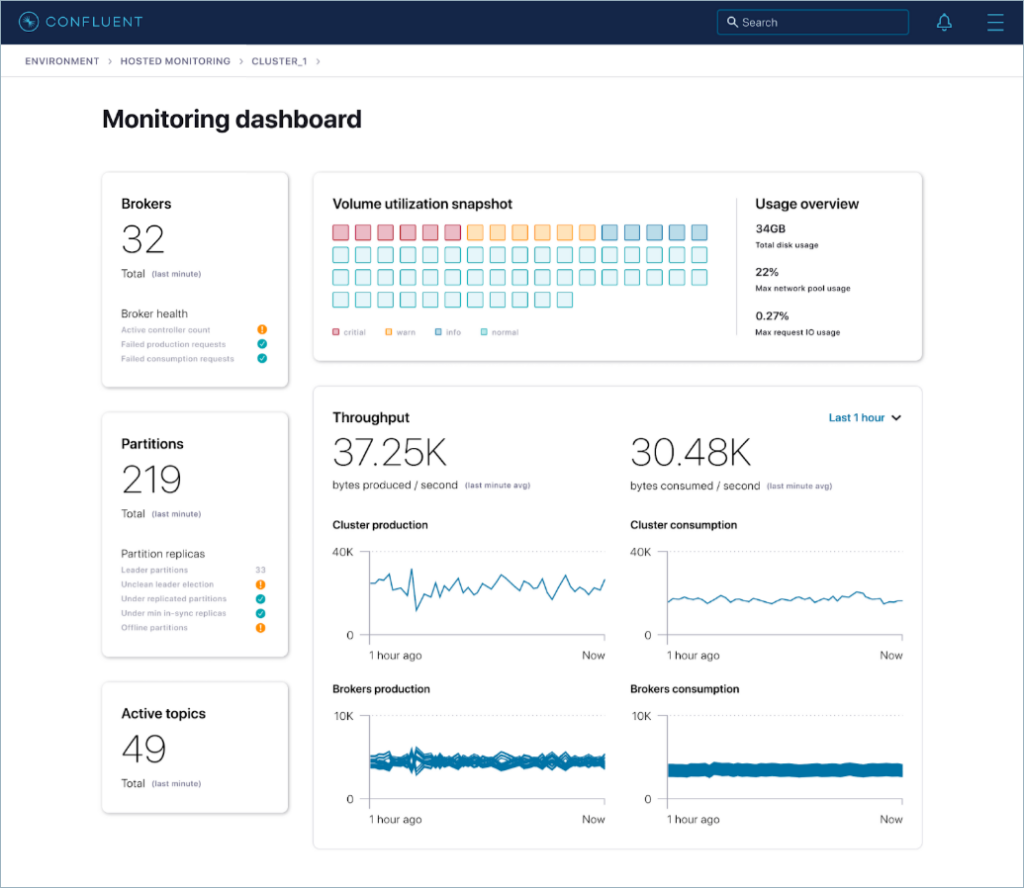

Confluent, for example, built Confluent Health+ (their cloud-based monitoring service) with Druid. They originally built their app with a different database, and in their words, “as the volume of data grew, our legacy pipeline struggled to keep up with our data ingestion and query loads”. And now with Druid, they’re able to deliver that great user experience every dev wants to build – and do it with ease.

Rapid drill-down exploration

A report simply reports (e.g. what is the top-selling product, what is the average customer’s age). But the real gem is understanding why something happened to either solve a problem in the present, or anticipate it happening again in the future.

Answering ‘why’ requires slicing and dicing data to explore and find root causes at lightning speed, so that when a person asks a question, they get the answer in less than a second. This is easy when the data set is small. But when dealing with data from cloud services, clicks, and IoT/telemetry, the underlying database has to correlate anywhere from a few to hundreds of dimensions across high-cardinality data with billions to trillions of rows. That’s not so easy.

With data at this scale, full table scans take too long and the typical query-shaping techniques used to speed up performance have expensive tradeoffs.

| Performance Technique | Tradeoff |
|---|---|
| Analyze recent data only | Misses the complete story |
| Precompute all the queries | Expensive and inflexible |
| Analyze roll-up aggregations | Can’t drill down into the data |
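The roll-up tradeoff is easy to see in miniature. This is a toy sketch with made-up data and a hypothetical `rollup` helper: once only the per-product summary is kept, a question about regions can no longer be answered.

```python
from collections import Counter

# Made-up raw events: (product, region, units_sold)
events = [
    ("widget", "EU", 3),
    ("widget", "US", 5),
    ("gadget", "EU", 2),
    ("gadget", "US", 7),
]


def rollup(rows, key_index):
    """Sum units_sold grouped by the column at `key_index`."""
    totals = Counter()
    for row in rows:
        totals[row[key_index]] += row[2]
    return dict(totals)


# A precomputed roll-up answers the question it was built for...
by_product = rollup(events, 0)

# ...but a new question (units by region) needs the raw events again.
by_region = rollup(events, 1)

print(by_product)
print(by_region)
```

If the raw events had been discarded after computing `by_product`, the regional breakdown would be unrecoverable, which is the "can’t drill down" cost in the table.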

But with the right database it’s easy to build an interactive data experience without any tradeoffs. For example, developers at HUMAN built an analytics app for internet bot detection using Apache Druid with Imply. Prior to Imply, their cloud data warehouse was too slow to keep up with their need to drill into massive web traffic quickly. Now, the app gives their data scientists the ability to instantly aggregate and filter across trillions of digital events, so they can classify anomalies and train ML models to detect malicious activity in real time.

Finding the needle in the haystack is hard enough. But with Apache Druid it’s easy to investigate behavior, diagnose problems, and enable an interactive data experience for any amount of data.

Real-time decisioning

Lastly, when people think of analytics, what comes to mind is a synthesized data set presented via a UI like a chart, graph, report, or dashboard, where a person comprehends it and then takes action. But sometimes the user just wants to know what the data means and what they should do about it – or the decision needs to be made so quickly that people themselves become a bottleneck. Some decisions are too fast for humans.

Think Google Maps and how it optimizes routing based on real-time and historical traffic flow and patterns – or real-time ad placement within milliseconds of a new visitor. There’s no data viz to interpret. Those apps rely on automated decisions derived by inference, and that’s a sweet spot for Druid.

A key reason Netflix consistently delivers a great streaming experience is its optimized, automated content delivery network. Netflix engineers have built an analytics application with Druid that ingests 2 million events per second and runs queries packed with filters, aggregations, and group-bys to infer anomalies within their infrastructure, endpoint activity, and content flow across a data set of over 1.5 trillion rows – all to optimize content delivery.

Devs choose Druid for powering inference in apps – including diagnostics, recommendations, and automated decisions – when the optimal answer requires instant query response on high-cardinality, high-dimensional event data at scale. The speed and data freshness of a Druid query result feed into rules engines and ML frameworks for real-time decisioning. If the app is for updating merchandise pricing once a day, Druid might be overkill. But if the app needs real-time decisioning, then Druid can’t be beat.

Conclusion

The database market is crowded, but the best ones are purpose-built for specific use cases. In the case of Apache Druid, it’s the best choice for use cases that need an interactive experience querying lots of real-time and historical data for lots of users.

If this sounds like something you’re trying to build, you should read this white paper on Druid Architecture & Concepts and definitely try a free trial of Imply Polaris, the cloud database built for Druid.

© 2022 Imply. All rights reserved. Imply and the Imply logo, are trademarks of Imply Data, Inc. in the U.S. and/or other countries. Apache Kafka, Apache Druid, Druid and the Druid logo are either registered trademarks or trademarks of the Apache Software Foundation in the USA and/or other countries. All other marks and logos are the property of their respective owners.
